Vespa Newsletter, April 2024

In the previous update, we mentioned the YQL IN operator, fuzzy and regexp matching in streaming search, Match-features, and parameter substitution in the embed function. Today, we’re excited to share the following updates:

Vespa SPLADE Embedder

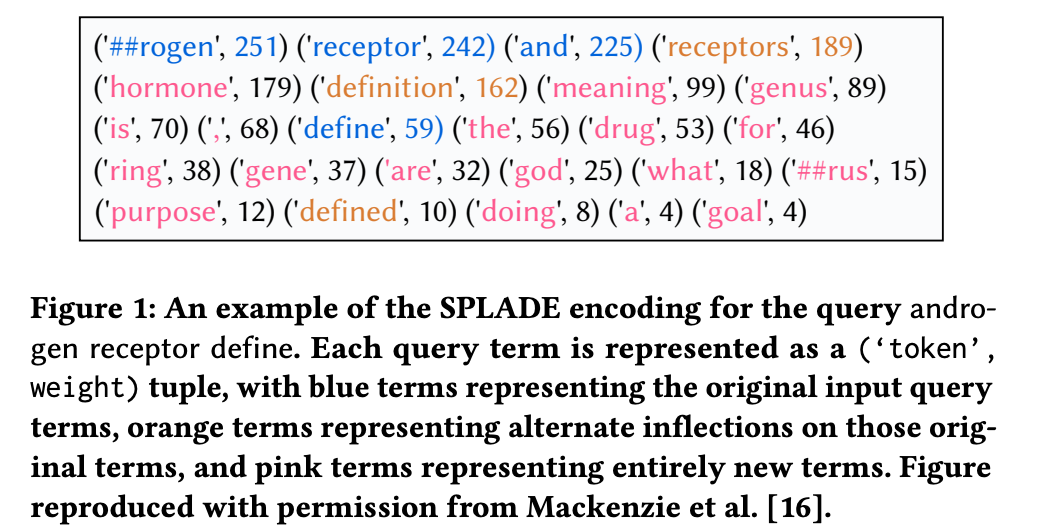

The SPLADE (SParse Lexical AnD Expansion) model is a highly effective approach to learned sparse retrieval, where queries and documents are represented by term impact scores derived from large language models. We recommend reading Joel Mackenzie et al.’s paper Exploring the Representation Power of SPLADE Models - example from the paper:

Since Vespa 8.321, the new SPLADE embedder supports SPLADE models. It maps text to a single-dimensional mapped tensor with the subword token as the cell label and the “impact” weight as the cell value. The tensor can then be used in ranking. Read more.

ONNX models with float16

Since Vespa 8.325, you can use half-precision floating-point (float16) ONNX models with the Vespa hugging-face-embedder and colbert-embedder:

<component id="mixbread" type="hugging-face-embedder">

<transformer-model url="https://huggingface.co/mixedbread-ai/mxbai-embed-large-v1/resolve/main/onnx/model_fp16.onnx"/>

<tokenizer-model url="https://huggingface.co/mixedbread-ai/mxbai-embed-large-v1/raw/main/tokenizer.json"/>

<pooling-strategy>cls</pooling-strategy>

</component>

This increases inference performance by 3x compared to float32 when using a GPU.

New guides for using Cohere embedding models

Cohere recently released a new embedding API, now featuring support for binary and int8 vectors - this is significant and enables cost savings and performance improvements - read more in Scaling vector search using Cohere binary embeddings and Vespa.

We have built three comprehensive guides on using the new Cohere embedding models with Vespa:

embed-english-v3.0with compact binary representation: cohere-binary-vectors-in-vespa-cloud.htmlembed-english-v3.0with two vector representations: billion-scale-vector-search-with-cohere-embeddings-cloud.htmlembed-multilingual-v3- multilingual hybrid search: multilingual-multi-vector-reps-with-cohere-cloud.html

Long-Context ColBERT

Since Vespa 8.299, the colbert-embedder accepts an array of strings in addition to the single string field previously supported. Details are in #30071. This makes it easier to build multi-paragraph ColBERT versions by inputting the paragraph as array elements - see the blog post for practical examples and more information.

New posts from our blog

You may have missed some of these new posts since the last newsletter:

- Embedding flexibility in Vespa

- Announcing Vespa Long-Context ColBERT

- The Singaporean government deploys state of the art semantic search

- Scaling vector search using Cohere binary embeddings and Vespa

- Perspectives on R in RAG

Other companies blogging about how and why they build on Vespa

- From the marqo blog by Farshid Zavareh: Marqo V2: Performance at Scale, Predictability, and Control. This article lets you dive into the second version of this open source platform and discover how it addresses the limitations of its predecessor, leveraging Marqo’s inference engine in concert with Vespa for unparalleled performance in large-scale, high-throughput semantic search applications.

- From FARFETCH Tech by Ricardo Rossi Tegão: Scaling Recommenders systems with Vespa. Our team successfully implemented the entire recommendation process of one algorithm with Vespa, matching the latency requirements (provide recommendations under 100ms) and scalability needs.

- From the Stanby Tech Blog by Satoshi Takatori: Migrating to the Vespa Search Engine. Migrating Elastic and Solr solutions to Vespa.

Upcoming meetups and conferences

- AICamp Berlin, Paris, London: Improving the Usefulness of LLMs with RAG

- SW2 Conference Denver: Building Something Real with Retrieval Augmented Generation (RAG)

- Infoshare 24 Gdansk: Building Something Real with Retrieval Augmented Generation (RAG)

Thanks for reading! Try out Vespa by deploying an application for free to Vespa Cloud.