Simplify Search with Multilingual Embedding Models

Photo by Bruno Martins on Unsplash

This blog post presents and shows how to represent a robust multilingual embedding model of the E5 family in Vespa. We also demonstrate how to evaluate the model’s effectiveness on multilingual information retrieval (IR) datasets.

Introduction

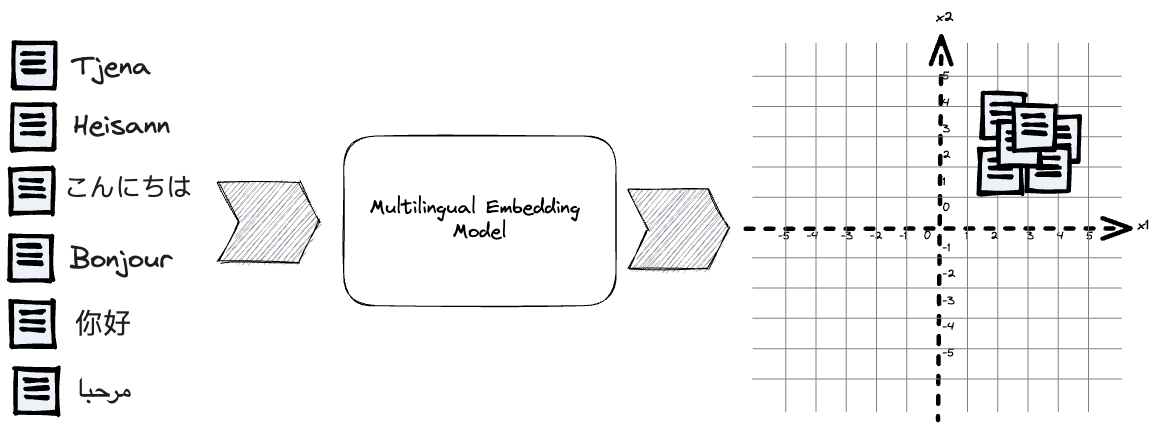

The fundamental concept behind embedding models is transforming textual data into a continuous vector space, wherein similar items are brought close together and dissimilar ones are pushed farther apart. Mapping multilingual texts into a unified vector embedding space makes it possible to represent and compare queries and documents from various languages within this shared space.

Meet the E5 family.

Researchers from Microsoft introduced the E5 family of text embedding models in the paper Text Embeddings by Weakly-Supervised Contrastive Pre-training. E5 is short for EmbEddings from bidirEctional Encoder rEpresentations. Using a permissive MIT license, the same researchers have also published the model weights on the Huggingface model hub. There are three multilingual E5 embedding model variants with different model sizes and embedding dimensionality. All three models are initialized from pre-trained transformer models with trained text vocabularies that handle up to 100 languages.

This model is initialized from xlm-roberta-base and continually trained on a mixture of multilingual datasets. It supports 100 languages from xlm-roberta, but low-resource languages may see performance degradation._

Similarly, the E5 embedding model family includes three variants trained only on English datasets.

Choose your E5 Fighter

The embedding model variants allow developers to trade effectiveness versus serving related costs. Embedding model size and embedding dimensionality impact task accuracy, model inference, nearest neighbor search, and storage cost.

These serving-related costs are all roughly linear with model size and embedding dimensionality. In other words, using an embedding model with 768 dimensions instead of 384 increases embedding storage by 2x and nearest neighbor search compute with 2x. Accuracy, however, is not nearly linear, as demonstrated on the MTEB leaderboard.

The nearest neighbor search for embedding-based retrieval could be accelerated by introducing approximate algorithms like HNSW. HNSW significantly reduces distance calculations at query time but also introduces degraded retrieval accuracy because the search is approximate. Still, the same linear relationship between embedding dimensionality and distance compute complexity holds.

| Model | Dimensionality | Model params (M) | Accuracy Average (56 datasets) | Accuracy Retrieval (15 datasets) |

| Small | 384 | 118 | 57.87 | 46.64 |

| Base | 768 | 278 | 59.45 | 48.88 |

| Large | 1024 | 560 | 61.5 | 51.43 |

Comparision of the E5 multilingual models. Accuracy numbers from MTEB leaderboard.

Do note that the datasets included in MTEB are biased towards English datasets, which means that the reported retrieval performance might not match up with observed accuracy on private datasets, especially for low-resource languages.

Representing E5 embedding models in Vespa

Vespa’s vector search and embedding inference support allows developers to build multilingual semantic search applications without managing separate systems for embedding inference and vector search over the multilingual embedding representations.

In the following sections, we use the small E5 multilingual variant, which gives us reasonable accuracy for a much lower cost than the larger sister E5 variants. The small model inference complexity also makes it servable on CPU architecture, allowing iterations and development locally without managing GPU-related infrastructure complexity.

Exporting E5 to ONNX format for accelerated model inference

To export the embedding model from the Huggingface model hub to ONNX format for inference in Vespa, we can use the Optimum library:

$ optimum-cli export onnx --task feature-extraction --library transformers -m intfloat/multilingual-e5-small multilingual-e5-small-onnx

The above optimum-cli command exports the HF model to ONNX format that can be imported

and used with the Vespa Huggingface

embedder.

Using the Optimum generated ONNX file and tokenizer configuration

file, we configure Vespa with the following in the Vespa application

package

services.xml

file.

<component id="e5" type="hugging-face-embedder">

<transformer-model path="model/multilingual-e5-small.onnx"/>

<tokenizer-model path="model/tokenizer.json"/>

</component>

That’s it! These two simple steps are all we need to start using the multilingual E5 model to embed queries and documents with Vespa.

Using E5 with queries and documents in Vespa

The E5 family uses text instructions mixed with the input data to separate queries and documents. Instead of having two different models for queries and documents, the E5 family separates queries and documents by prepending the input with “query:” or “passage:”.

schema doc {

document doc {

field title type string { .. }

field text type string { .. }

}

field embedding type tensor<float>(x[384]) {

indexing {

"passage: " . input title . " " . input text | embed | attribute

}

}

The above Vespa schema language

uses the embed indexing

language

functionality to invoke the configured E5 embedding model, using a

concatenation of the “passage: “ instruction, the title, and

the text. Notice that the embedding tensor

field defines the embedding dimensionality (384).

The above schema uses a single vector representation per document. With Vespa multi-vector indexing, it’s also possible to represent and index multiple vector representations for the same tensor field.

Similarly, on the query, we can embed the input query text with the E5 model, now prepending the input user query with “query: “

{

"yql": "select ..",

"input.query(q)": "embed(query: the query to encode)",

}

Evaluation

To demonstrate how to evaluate multilingual embedding models, we evaluate the small E5 multilingual variant on three information retrieval (IR) datasets. We use the classic trec-covid dataset, a part of the BEIR benchmark, that we have written about in blog posts before. We also include two languages from the MIRACL (Multilingual Information Retrieval Across a Continuum of Languages) datasets.

All three datasets use NDCG@10 to evaluate ranking effectiveness. NDCG is a ranking metric that is precision-oriented and handles graded relevance judgments.

| Dataset | Included in E5 fine-tuning | Language | Documents | Queries | Relevance Judgments |

| BEIR:trec-covid | No | English | 171,332 | 50 | 66,336 |

| MIRACL:sw | Yes (The train split was used) | Swahili | 131,924 | 482 | 5092 |

| MIRACL:yo | No | Yoruba | 49,043 | 119 | 1188 |

IR dataset characteristics

We consider both BEIR:trec-covid and MIRACL:yo as out-of-domain datasets as E5 has not been trained or fine tuned on them since they don’t contain any training split. Applying E5 on out-of-domain datasets is called zero-shot, as no training examples (shots) are available.

The Swahili dataset could be categorized as an in-domain dataset as E5 has been trained on the train split of the dataset. All three datasets have documents with titles and text fields. We use the concatenation strategy described in previous sections, inputting both title and text to the embedding model.

We evaluate the E5 model using exact nearest neighbor search without HNSW indexing, and all experiments are run on an M1 Pro (arm64) laptop using the open-source Vespa container image. We contrast the E5 model results with Vespa BM25.

| Dataset | BM25 | Multilingual E5 (small) |

| MIRACL:sw | 0.4243 | 0.6755 |

| MIRACL:yo | 0.6831 | 0.4187 |

| BEIR:trec-covid | 0.6823 | 0.7139 |

Retrieval effectiveness for BM25 and E5 small (NDCG@10)

For BEIR:trec-covid, we also evaluated a hybrid combination of E5 and BM25, using a linear combination of the two scores, which lifted NDCG@10 to 0.7670. This aligns with previous findings, where hybrid combinations outperform each model used independently.

Summary

As demonstrated in the evaluation, multilingual embedding models can enhance and simplify building multilingual search applications and provide a solid baseline. Still, as we can see from the evaluation results, the simple and cheap Vespa BM25 ranking model outperformed the dense embedding model on the MIRACL Yoruba queries.

This result can largely be explained by the fact that the model had not been pre-trained on the language (low resource) or tuned for retrieval with Yoruba queries or documents. This is another reminder of what we wrote about in a blog post about improving zero-shot ranking, where we summarize with a quote from the BEIR paper, which evaluates multiple models in a zero-shot setting:

In-domain performance is not a good indicator for out-of-domain generalization. We observe that BM25 heavily underperforms neural approaches by 7-18 points on in-domain MS MARCO. However, BEIR reveals it to be a strong baseline for generalization and generally outperforming many other, more complex approaches. This stresses the point that retrieval methods must be evaluated on a broad range of datasets.

In the next blog post, we will look at ways to make embedding inference cheaper without sacrificing much retrieval effectiveness by optimizing the embedding model. Furthermore, we will show how to save 50% of embedding storage using Vespa’s support for bfloat16 precision instead of float, with close to zero impact on retrieval effectiveness.

If you want to reproduce the retrieval results, or get started with multilingual embedding search, check out the new multilingual search sample application.