RAG at Scale: Why Tensors Outperform Vectors in Real-World AI

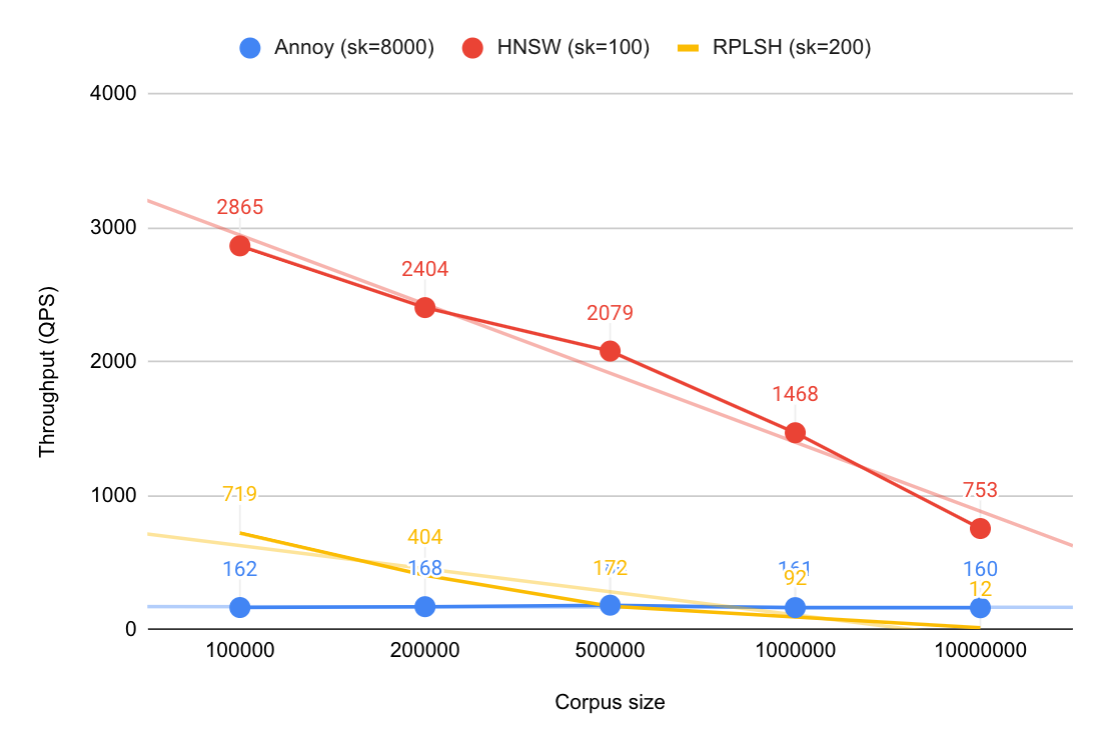

As AI applications evolve, it’s clear that semantic similarity alone isn’t enough. Vector databases are a foundational component of many modern AI systems, powering fast and scalable retrieval through techniques like approximate nearest neighbor (ANN) search to surface information based on similarity. But as retrieval-augmented generation (RAG) applications evolve, they increasingly require richer data representations that capture relationships within and across modalities, like text, images and video.

With this growing complexity, the limitations of basic vector representations are becoming harder to ignore:

- Lack of full-text search capabilities: Most vector databases can’t match on exact phrases, boolean logic or keyword expressions, leading to imprecise or incomplete results.

- Weak integration with structured data and business logic: Most vector databases struggle to combine unstructured content with filters, rules or metadata like price, date or category.

- Lack of support for custom ranking: Tailoring relevance scores to your domain through business rules or machine learning models can be difficult or impossible in many systems.

- No built-in machine learning inference: External re-rankers or classifiers introduce latency, complexity and failure points.

- Fragile real-time update pipelines: Many setups require awkward workarounds to keep indexes fresh, slowing down time to answer.

These limitations become especially problematic in applications requiring personalization, hybrid relevance scoring, real-time responsiveness and multimodal understanding. What’s needed is structure — a way to represent relationships within and across modalities in a form that’s both expressive and performant.

That’s where tensors come in.

Vectors and tensors are technically the same kind of object: both are numerical representations used in machine learning, and a vector is simply a one-dimensional tensor. Tensors generalize that idea to multiple dimensions, enabling richer, more expressive representations.

Because tensors preserve critical context (sequence, position, relationships and modality-specific structure), they are far better suited for advanced retrieval tasks where precision and explainability matter.

Vectors vs. Tensors: A Quick Comparison

At a glance, vectors and tensors may look similar. But when it comes to expressing context and relationships, their capabilities diverge sharply:

| Data Type | Vector Representation | Tensor Representation |
|---|---|---|
| Text | `x[386]` | `tensor<bfloat16>(chunk{}, x[386])` |
| Image | `v[128]` | `tensor<float>(patch{}, v[128])` |
| Video | `v[1024]` | `tensor<float>(cam{}, vehicle{}, v[1024])` |

… where the vectors / numeric tensor dimensions look like [0.6, 0.8, 0.5, ...]

Vectors flatten the data, representing everything as a single embedding. Tensors retain structure, enabling:

- Fine-grained retrieval, such as ranking image regions

- Context-aware embeddings across modalities that preserve semantic and spatial relationships

- Precise query interaction where similarity is just one of many dimensions considered

These capabilities make tensors the foundation for powering modern retrieval techniques like ColBERT, ColPali and temporal video search, all of which depend on comparing multiple embeddings per document, not just one.
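To make "multiple embeddings per document" concrete, here is a minimal sketch of ColBERT-style late interaction (MaxSim) in plain Python. For each query embedding it takes the best-matching document embedding, then sums those maxima. The labels (`q0`, `chunk0`), the two-dimensional embeddings and the function names are illustrative assumptions, not part of any Vespa API.

```python
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def max_sim(query: Dict[str, Vector], doc: Dict[str, Vector]) -> float:
    """Sum, over query embeddings, of the best dot product with any doc embedding."""
    return sum(
        max(dot(q, d) for d in doc.values())
        for q in query.values()
    )


# Hypothetical toy data: each key is a label along a mapped dimension.
query = {"q0": [1.0, 0.0], "q1": [0.0, 1.0]}
doc_a = {"chunk0": [0.9, 0.1], "chunk1": [0.1, 0.8]}  # two embeddings
doc_b = {"chunk0": [0.5, 0.5]}                        # one flattened embedding

score_a = max_sim(query, doc_a)
score_b = max_sim(query, doc_b)
```

In this toy data `doc_a` scores higher because each query embedding finds a close match in a different chunk, which a single averaged vector like `doc_b` cannot offer.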

Trying to replicate these capabilities with vectors alone leads to fragile architectures: external pipelines for reranking, disconnected model services for filtering and a patchwork of components that are costly to maintain and difficult to scale.

A Simplified Tensor Framework

In most machine learning libraries, tensors are treated as unstructured, implicitly ordered arrays with weak typing and inconsistent semantics. This can create major challenges in real-world applications:

- Large, inconsistent APIs that slow down development

- Separate logic for handling dense vs. sparse data

- Limited optimization potential and hard-to-read, error-prone code

These limitations become especially painful in workloads involving hybrid data, multimodal inputs and complex ranking or inference pipelines.

To address these issues and make tensors more practical to use at scale, Vespa developed a rigorously defined, strongly typed tensor formalism, designed for scalability, readability and precision in real-world applications. Unlike many ML frameworks that focus solely on model development, Vespa’s tensor system is also designed for high-performance serving in real-time production environments.

Vespa’s Tensor Formalism

Vespa’s tensor formalism is grounded in three core principles:

- Unified support for dense and sparse dimensions: One consistent representation for all tensor types, with no need to switch between formats or APIs.

- Strong typing with named dimensions: Named dimensions act like semantic labels, enabling safer computation, clearer documentation and easier debugging of complex pipelines.

- A minimal, composable set of tensor operations: Instead of bloated APIs, Vespa provides a concise mathematical foundation that is flexible enough to express advanced logic while remaining readable and maintainable.

The result is a cleaner, more expressive, and highly scalable tensor system optimized for everything from multimodal ranking to real-time inference.

1. Unified Support for Dense and Sparse Dimensions

Real-world AI workloads often require both dense and sparse data representations. Dense dimensions are ideal for fixed-size numeric vectors like sentence embeddings, image pixels or audio features, where every value is present and contributes to the result. Sparse dimensions, on the other hand, represent values across large, mostly empty spaces like one-hot encoded categories, symbolic tags, or user-item interaction histories. Many models rely on both simultaneously.

Vespa’s tensor formalism supports:

- Mixing dense and sparse dimensions within a single tensor

- Flexible computation across both types, with no need for separate handling or conversions

- String-labeled sparse dimensions (such as "feature:color:red"), enabling symbolic features directly in models

This makes it far easier to build AI systems that reflect real-world decision logic. You don’t need separate systems or logic for each type. For example, a shopping recommendation can combine semantic similarity between product descriptions (dense) with rule-based constraints like “only show laptops” or “prefer Apple” (sparse). All in one model, all in one query. See this product demo.
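The shopping example can be sketched in a few lines of plain Python. The code below is an illustration under stated assumptions rather than Vespa's implementation: the product catalog, the tag labels (`category:laptop`, `brand:apple`), the preference weight and the additive scoring formula are all hypothetical. Dense data is a list of floats, and sparse data is a dict keyed by string labels.

```python
from typing import Dict, List, Optional


def dot(a: List[float], b: List[float]) -> float:
    """Dense dot product over an indexed dimension."""
    return sum(x * y for x, y in zip(a, b))


def sparse_dot(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Sparse dot product: only labels present in both sides contribute."""
    return sum(w * b[label] for label, w in a.items() if label in b)


# Hypothetical catalog: a dense embedding plus string-labeled sparse tags.
products = {
    "macbook": {"embedding": [0.9, 0.1],
                "tags": {"category:laptop": 1.0, "brand:apple": 1.0}},
    "thinkpad": {"embedding": [0.8, 0.2],
                 "tags": {"category:laptop": 1.0, "brand:lenovo": 1.0}},
    "ipad": {"embedding": [0.7, 0.3],
             "tags": {"category:tablet": 1.0, "brand:apple": 1.0}},
}


def score(product: dict, query_embedding: List[float],
          required_tag: str, preferences: Dict[str, float]) -> Optional[float]:
    """Hard filter on one tag, then semantic similarity plus soft preferences."""
    if required_tag not in product["tags"]:
        return None  # "only show laptops"
    semantic = dot(query_embedding, product["embedding"])
    preference = sparse_dot(preferences, product["tags"])  # "prefer Apple"
    return semantic + preference


query_embedding = [1.0, 0.0]
preferences = {"brand:apple": 0.5}

scores = {}
for name, product in products.items():
    s = score(product, query_embedding, "category:laptop", preferences)
    if s is not None:
        scores[name] = s

ranked = sorted(scores, key=scores.get, reverse=True)
```

The point of the sketch is that the filter, the preference boost and the similarity term all live in one scoring function over one data structure, instead of being spread across separate services.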

2. Strong Typing with Named Dimensions

Named dimensions give tensors semantic meaning, making them easier to read, debug, and reason about. Instead of relying on ambiguous axis positions like axis=0, developers can use clear, human-readable names like “batch,” “time” or “feature.”

Benefits of named dimensions include:

- Improved readability and maintainability

- Safer, verifiable tensor operations

- Support for a clean, general-purpose function set

This type safety also prevents subtle bugs, like mistakenly combining two tensors along the wrong axis. With named dimensions, the system can detect and prevent mismatches before they cause issues.
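As a rough illustration of how named dimensions allow mismatches to be caught up front, the toy checker below computes the result type of combining two tensors and rejects a shared dimension whose sizes disagree. The `TensorType` alias, the `joined_type` function and the dimension names are assumptions made for this sketch; Vespa's actual type-resolution rules may differ.

```python
from typing import Dict

# A tensor type here is just: dimension name -> size (a hypothetical simplification).
TensorType = Dict[str, int]


def joined_type(a: TensorType, b: TensorType) -> TensorType:
    """Result type of combining two tensors; shared names must agree in size."""
    result = dict(a)
    for name, size in b.items():
        if name in result and result[name] != size:
            raise TypeError(
                f"dimension '{name}' has size {result[name]} vs {size}"
            )
        result[name] = size
    return result


query_type = {"x": 128}
doc_type = {"patch": 16, "x": 128}
bad_type = {"x": 64}

# The shared dimension "x" matches, so the result keeps both dimensions.
ok = joined_type(query_type, doc_type)

# A mismatch is detected from the types alone, before any values are touched.
try:
    joined_type(query_type, bad_type)
    mismatch_caught = False
except TypeError:
    mismatch_caught = True
```

With positional axes, the same mistake would depend on whether two unrelated axes happened to have compatible lengths. With names, the check is explicit.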

3. A Minimal, Composable Set of Tensor Operations

By grounding computation in a small, closed set of core tensor operations, Vespa makes the tensor system both expressive and manageable. These operations serve as building blocks for even the most advanced logic, without introducing unnecessary complexity.

This design leads to:

- Interoperability: Any system that supports this core set can fully participate in tensor computation.

- Optimization: Performance tuning can focus on a limited number of operations, while high-level use cases compose them as needed.
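To show how a handful of primitives can compose into higher-level logic, here is a toy sketch with three operations called `tmap`, `join` and `reduce_sum`. The article does not list the core operation set, so this choice of operations, their names and the dict-based tensor representation are all assumptions for illustration. A per-chunk dot product falls out of a join (multiply) followed by a reduce (sum) over the shared dimension `x`.

```python
from typing import Callable, Dict, List, Tuple

# A cell address is a sorted tuple of (dimension, label) pairs.
Address = Tuple[Tuple[str, str], ...]
Tensor = Dict[Address, float]


def make(cells: List[Tuple[Dict[str, str], float]]) -> Tensor:
    """Build a tensor from (address-dict, value) pairs."""
    return {tuple(sorted(addr.items())): value for addr, value in cells}


def tmap(t: Tensor, f: Callable[[float], float]) -> Tensor:
    """Apply f to every cell value."""
    return {addr: f(v) for addr, v in t.items()}


def join(a: Tensor, b: Tensor, f: Callable[[float, float], float]) -> Tensor:
    """Combine cells whose labels agree on every shared dimension."""
    out: Tensor = {}
    for addr_a, va in a.items():
        da = dict(addr_a)
        for addr_b, vb in b.items():
            db = dict(addr_b)
            if all(da[k] == db[k] for k in da.keys() & db.keys()):
                merged = {**da, **db}
                out[tuple(sorted(merged.items()))] = f(va, vb)
    return out


def reduce_sum(t: Tensor, dim: str) -> Tensor:
    """Sum away one named dimension."""
    out: Tensor = {}
    for addr, v in t.items():
        rest = tuple(pair for pair in addr if pair[0] != dim)
        out[rest] = out.get(rest, 0.0) + v
    return out


query = make([({"x": "0"}, 1.0), ({"x": "1"}, 2.0)])
doc = make([
    ({"chunk": "a", "x": "0"}, 3.0), ({"chunk": "a", "x": "1"}, 4.0),
    ({"chunk": "b", "x": "0"}, 5.0), ({"chunk": "b", "x": "1"}, 6.0),
])

# Dot product per chunk = join with multiply, then sum over "x".
per_chunk = reduce_sum(join(query, doc, lambda q, d: q * d), "x")
halved = tmap(per_chunk, lambda v: v / 2)
```

Because every higher-level computation reduces to these few building blocks, an optimizer only has to make the building blocks fast.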

The result is a framework that combines mathematical rigor with real-world scalability, ideal for powering hybrid search, personalized ranking, and multimodal AI at production scale.

Why the Future of AI Applications Belongs to Tensors

Vector search has been a powerful enabler, but as applications grow more complex, dynamic and multimodal, vectors are no longer sufficient. Tensors provide the foundation that vector-only systems lack. If vectors help retrieve, tensors help reason.

Unlike flat vectors, tensors preserve structure, enable hybrid logic and support meaningful computation across diverse data types. With Vespa’s production-ready tensor framework, organizations can seamlessly integrate dense and sparse data, personalize experiences at scale and make real-time, context-aware decisions, all within one high-performance platform.

Conclusion: Supporting Symbolic and Semantic Search Natively

The limitations of today’s vector-first systems are becoming increasingly clear. Bolting vector search onto Lucene, or full-text search onto vector databases, leads to fractured architectures that can’t meet the demands of modern AI applications.

Vespa offers a fundamentally different path forward. Built from the ground up to unify symbolic and semantic search, Vespa lets developers express complex logic in a single query, blending keyword matches, structured filters, phrase constraints, vector similarity and rich tensor-based reasoning. You don’t have to compromise on precision or performance or worry about pipeline fragility with external re-rankers.

And with Vespa’s production-ready tensor formalism, you’re not limited to flat embeddings. You can represent and retrieve fine-grained relationships within and across modalities, whether it’s a product in an image, a timestamp in a video, or a legal clause in a contract.

If vectors help you retrieve, tensors help you reason. And Vespa gives you both, alongside real-time inference, symbolic control, and scalable infrastructure that's already powering advanced AI applications in production at companies like Perplexity and Spotify.

The next generation of AI isn’t just about finding relevant data. It’s about making sense of it. Vespa is the engine built for that future.

Want to see it in action? Follow The RAG Blueprint to build a RAG system using Vespa’s tensor framework, or learn more about tensors here.
