LLMs, Vespa, and a side of Summer Debugging

Note: Since writing this post, the two MCP server components mentioned below have been refactored into regular Java classes for simplicity. The overall functionality and architecture remain the same.

The Beginning: Learning Vespa and MCP

During our first few days at Vespa, we spent time working through the getting-started tutorials. The learning curve was steep, but it gave us a solid foundation for understanding the core concepts of how to use Vespa effectively. With the basics in place, we turned our attention to the summer project: the MCP server. Like many students, our first instinct was to ask an LLM, “What is an MCP server?” Somewhat amusingly, the initial response described a Minecraft server. This moment highlighted how new the concept is, and how unfamiliar it probably remains for most people. After some further digging, we found ourselves with a long list of questions we needed to answer:

- What is an MCP server, and how does it actually work?

- How can we integrate it into Vespa?

- What programming language should we use?

- What capabilities should we provide through the MCP server?

- How can we make the MCP server generic enough to work with any Vespa application, regardless of its fields, rank profiles, and so on?

- Are there any important security considerations?

What Even Is an MCP Server?

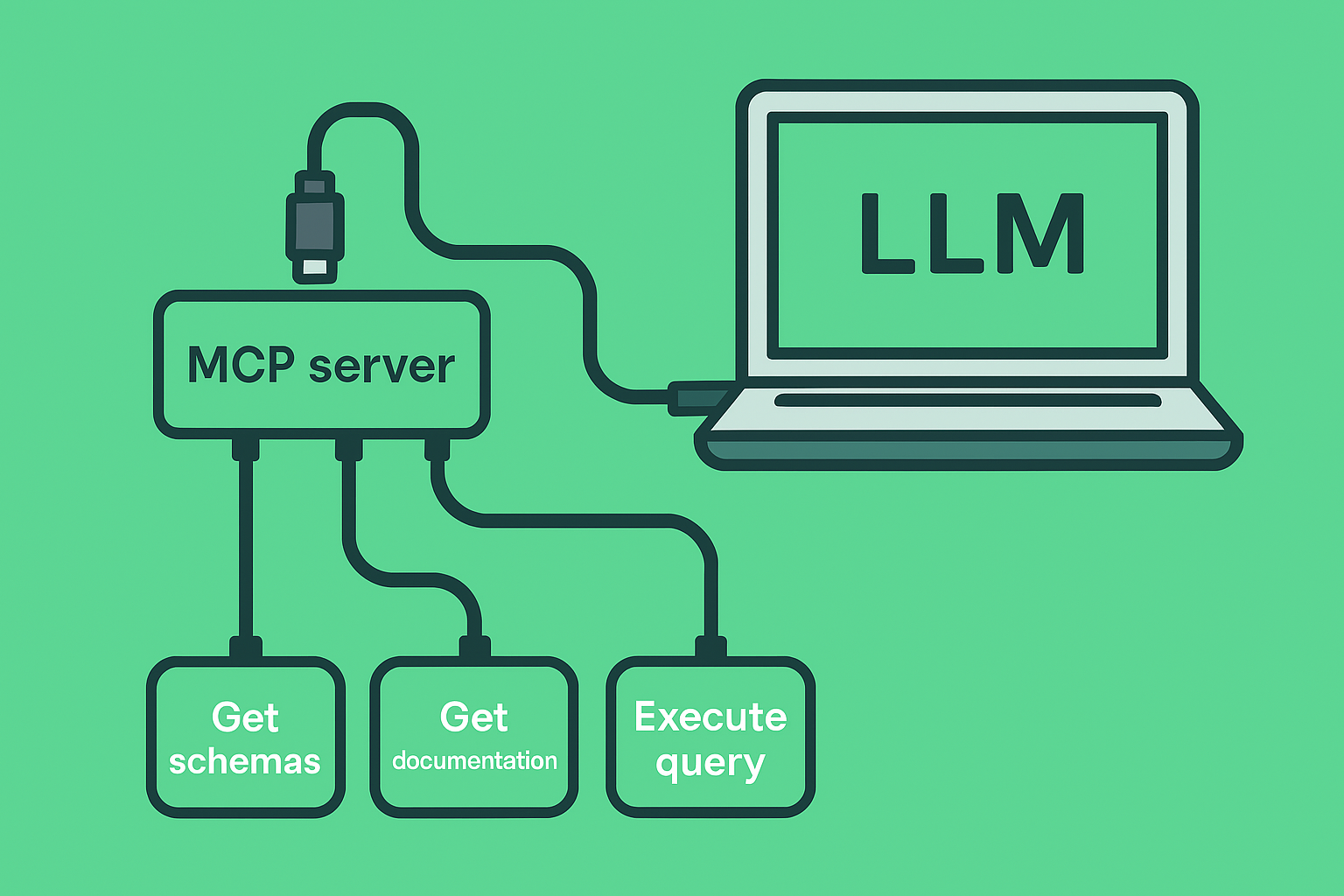

The Model Context Protocol (MCP) standardizes how applications provide context to large language models. The standard is developed by Anthropic, and the architecture is made up of three core parts:

- MCP Host – The AI application that coordinates and manages one or more MCP clients.

- MCP Client – Connects to an MCP server and retrieves context for the host to use.

- MCP Server – The server that provides context and tools to MCP clients.

For our case, the server could enable three main types of capabilities:

- Tools – Executable functions that allow LLMs to perform actions or retrieve information (model-controlled).

- Resources – Provide additional context to the LLM through structured data or content (application-controlled).

- Prompts – Predefined templates or instructions that guide LLM interactions.
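On the wire, MCP messages are JSON-RPC 2.0, and each capability type has its own pair of methods for discovery and use, for example `tools/list` and `tools/call`. The Java sketch below maps the three capability types to those method names and builds a request by hand. It is illustrative only: `McpCapability` and `JsonRpc` are hypothetical names, and a real server would rely on an MCP SDK for serialization rather than string formatting.

```java
import java.util.Locale;

// Hypothetical sketch: each MCP capability type and the JSON-RPC 2.0 methods
// a client uses to discover it ("list") and to use it ("call", "read", "get").
enum McpCapability {
    TOOLS("tools/list", "tools/call"),             // model-controlled
    RESOURCES("resources/list", "resources/read"), // application-controlled
    PROMPTS("prompts/list", "prompts/get");        // user-controlled templates

    final String listMethod;
    final String useMethod;

    McpCapability(String listMethod, String useMethod) {
        this.listMethod = listMethod;
        this.useMethod = useMethod;
    }
}

final class JsonRpc {
    private JsonRpc() {}

    // Builds a JSON-RPC 2.0 request string. paramsJson must already be valid JSON,
    // and method must not contain characters that need escaping.
    static String request(int id, String method, String paramsJson) {
        return String.format(Locale.ROOT,
                "{\"jsonrpc\":\"2.0\",\"id\":%d,\"method\":\"%s\",\"params\":%s}",
                id, method, paramsJson);
    }
}
```

For instance, `JsonRpc.request(1, McpCapability.TOOLS.listMethod, "{}")` produces `{"jsonrpc":"2.0","id":1,"method":"tools/list","params":{}}`, which is how a client asks a server which tools it offers.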

If anyone’s interested in more detailed information about MCP, the official website is the place to go. Our goal was to develop an MCP server that connects to a running Vespa application and enables specific capabilities.

First Milestone: The Python Prototype

At the beginning, we decided to simplify the project as much as possible. We planned to develop a standalone MCP server that could communicate with both an MCP client and a Vespa application. This meant that the MCP server would communicate with the client through standard input and output, and with the Vespa application through Vespa’s Python API.
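The stdio transport is simple framing: the client launches the server as a subprocess, writes one JSON-RPC message per line to its stdin, and reads responses line by line from its stdout. The prototype itself was written in Python, so the following is only a hypothetical Java rendering of the same loop. `StdioLoop` and the pluggable `handler` are placeholder names, and messages are treated as opaque strings, leaving JSON parsing to the handler.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Writer;
import java.util.function.UnaryOperator;

// Hypothetical sketch of stdio framing: one JSON-RPC message per line in,
// one response per line out.
final class StdioLoop {
    private StdioLoop() {}

    static void serve(BufferedReader in, Writer out, UnaryOperator<String> handler)
            throws IOException {
        String line;
        while ((line = in.readLine()) != null) { // null: the client closed stdin
            if (line.isBlank()) {
                continue;                        // skip empty lines
            }
            String response = handler.apply(line);
            if (response != null) {              // notifications get no response
                out.write(response);
                out.write('\n');                 // messages are newline-delimited
                out.flush();                     // the client waits for each reply
            }
        }
    }
}
```

Wired to the real process streams, this would be called as `StdioLoop.serve(new BufferedReader(new InputStreamReader(System.in, StandardCharsets.UTF_8)), new OutputStreamWriter(System.out, StandardCharsets.UTF_8), handler)`. Any logging belongs on stderr, since stdout must carry MCP messages only.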

We started by developing an MCP server using PyVespa and the official Python MCP SDK. Through the MCP server, we wanted to connect to an existing Vespa application, either locally or in the cloud, and enable an LLM to run queries through the MCP client. Seeing as the Model Context Protocol is developed by Anthropic, we decided to use their very own Claude Desktop as the host to explore and develop our server.

It quickly became clear that we needed three main tools to effectively query a Vespa application through an LLM:

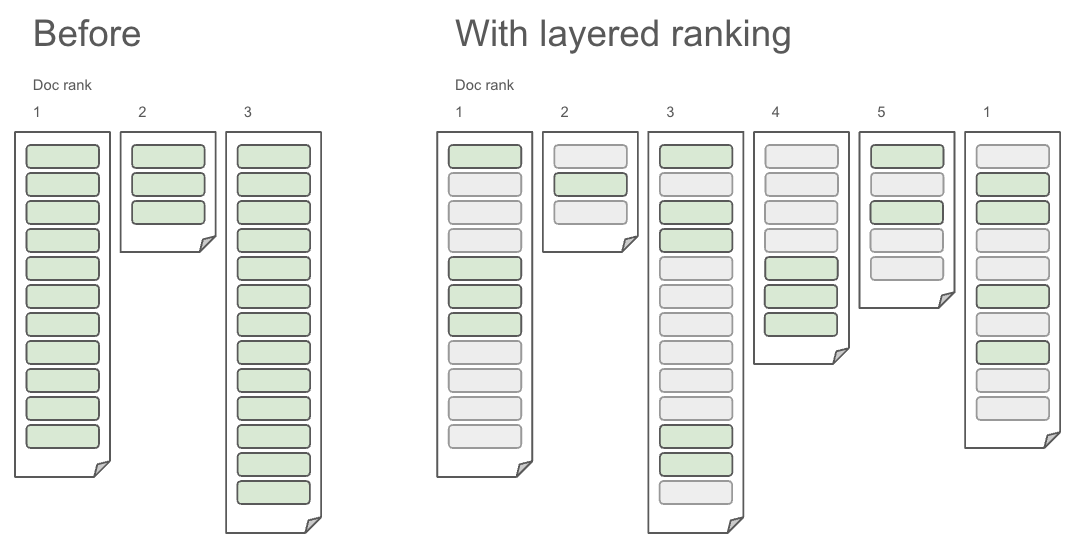

- A schema tool – retrieves information about the Vespa schema, such as fields and rank profiles, so that the LLM has the necessary context.

- A documentation tool – searches the official Vespa documentation for relevant query syntax, ranking features, and more. This leverages the public documentation API, which uses hybrid search to retrieve relevant content.

- A query tool – runs queries against the Vespa application and returns the responses to the LLM.

After a couple of weeks, we had a functional MCP server that could retrieve schemas, search documentation, and run queries to fetch data. This was a satisfying milestone: everything worked surprisingly well, and development progressed faster than expected.
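Conceptually, the three tools form a small registry that `tools/list` enumerates and `tools/call` dispatches into by name. The sketch below assumes hypothetical tool names (`getSchemas`, `searchDocumentation`, `executeQuery`) and placeholder handlers that return strings; in the real server, the handlers would talk to the Vespa application and to the documentation API.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical sketch: tools registered by name, listed for tools/list,
// and dispatched to for tools/call. Names and handlers are placeholders.
final class ToolRegistry {
    record Tool(String name, String description,
                Function<Map<String, String>, String> handler) {}

    private final Map<String, Tool> tools = new LinkedHashMap<>(); // keeps registration order

    ToolRegistry register(Tool tool) {
        tools.put(tool.name(), tool);
        return this;
    }

    // Backs tools/list: every registered tool with its description.
    List<Tool> list() {
        return List.copyOf(tools.values());
    }

    // Backs tools/call: look the tool up by name and run it with the arguments.
    String call(String name, Map<String, String> arguments) {
        Tool tool = tools.get(name);
        if (tool == null) {
            throw new IllegalArgumentException("Unknown tool: " + name);
        }
        return tool.handler().apply(arguments);
    }
}
```

Rejecting unknown names keeps the model confined to the tools the server deliberately exposes, which fits the read-only design described later in this post.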

The Real Challenge: Container Integration

Now it was time to take on the more challenging task: how could we integrate the MCP server as part of the Vespa container itself? After some back and forth, it became clear that the solution would be significantly more complex than the standalone MCP server — and far from trivial.

Since the MCP server was likely to be integrated into the Vespa container, the implementation had to be written in Java. This was new to both of us, but after a quick crash course, we began developing the MCP server using the official Java MCP SDK, without frameworks like Spring, and used the ExecutionFactory class to invoke Vespa queries directly from a component.

Building a Vespa Component

As previously mentioned, our goal was to make the MCP server an integrated part of a fully functional Vespa app. To do this, we configured the server as a Vespa component - a Java class whose lifecycle is managed by the container. Components benefit from automatic dependency injection and seamless integration with Vespa’s internal APIs.

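```java
import com.yahoo.component.AbstractComponent;
import com.yahoo.component.annotation.Inject;

// The MCP server implementation. The class is declared as a <component>
// in services.xml, and the container manages its lifecycle.
public class App extends AbstractComponent {

    private final VespaTools vespaTools;

    @Inject
    public App(VespaTools vespaTools) {
        // The container automatically injects constructor dependencies
        this.vespaTools = vespaTools;
    }

    @Override
    public void deconstruct() {
        // Called by the container when the component is taken out of service
    }
}
```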

With that configured, the MCP server starts automatically when the app deploys. This allows users to connect to it immediately without needing to run a separate service alongside Vespa.

Solving the Transport Puzzle

With the server inside the Vespa app, we needed a way to communicate with MCP clients. We implemented a custom request handler that exposes an /mcp/ endpoint and handles the communication between clients and our MCP server.

MCP supports several transport mechanisms. We started with HTTP+SSE (Server-Sent Events) since it was well supported by Claude Desktop at the time. This worked by opening an SSE stream for responses while accepting POST requests for commands. However, as the SDK evolved, we switched to the newer Streamable HTTP transport to stay current with the protocol standard.
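Over Streamable HTTP, each client message is an ordinary HTTP POST of a JSON-RPC body to a single endpoint, here the `/mcp/` path exposed by the request handler. The hypothetical `McpHttpClientSketch` below uses the JDK's `java.net.http.HttpRequest` to show the headers involved: the client advertises that it accepts either a plain JSON response or an SSE stream, and, for Vespa Cloud, attaches the access token as a bearer token. The base URL and token are placeholders, and the request is only built, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch: how an MCP client might frame one Streamable HTTP call
// to the server's /mcp/ endpoint. The request is built here, not sent.
final class McpHttpClientSketch {
    private McpHttpClientSketch() {}

    static HttpRequest buildRequest(String baseUrl, String jsonRpcBody, String token) {
        HttpRequest.Builder builder = HttpRequest.newBuilder(URI.create(baseUrl + "/mcp/"))
                .header("Content-Type", "application/json")
                // The server may answer with a single JSON body or an SSE stream.
                .header("Accept", "application/json, text/event-stream")
                .POST(HttpRequest.BodyPublishers.ofString(jsonRpcBody));
        if (token != null) {
            // Token-protected Vespa Cloud endpoints expect a bearer token.
            builder.header("Authorization", "Bearer " + token);
        }
        return builder.build();
    }
}
```

Sending the request would then be a matter of `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`.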

Another challenge came when the Vespa Team raised concerns about maintaining session state across multiple nodes. In a distributed system, if a session is created on one node and a subsequent request hits another node, the session won’t be found. To address this, we implemented a stateless version that sends all responses as single HTTP responses without maintaining sessions or SSE streams. This ensures the MCP server scales properly with Vespa’s distributed architecture.
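The scaling problem is easy to reproduce in miniature. In the hypothetical sketch below, each `ContainerNode` keeps its sessions in local memory, as a session-based Streamable HTTP server would when issuing an `Mcp-Session-Id` header. A session created on one node is unknown to its neighbor, while the stateless path needs no shared state at all. The handler and the `HTTP 404` string are placeholders, not the actual implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;
import java.util.function.UnaryOperator;

// Hypothetical sketch contrasting session-based and stateless handling.
// Each ContainerNode stands in for one Vespa container node behind a load balancer.
final class ContainerNode {
    private final Map<String, String> sessions = new HashMap<>(); // this node's memory only
    private final UnaryOperator<String> handler;

    ContainerNode(UnaryOperator<String> handler) {
        this.handler = handler;
    }

    // Stateful variant: initializing creates a session id, which a session-based
    // server returns in the Mcp-Session-Id header; later requests must send it back.
    String initializeSession() {
        String id = UUID.randomUUID().toString();
        sessions.put(id, "initialized");
        return id;
    }

    String handleWithSession(String sessionId, String body) {
        if (!sessions.containsKey(sessionId)) {
            return "HTTP 404: unknown session"; // the session lives on another node
        }
        return handler.apply(body);
    }

    // Stateless variant: each POST is self-contained and gets exactly one
    // response, so any node can serve any request.
    String handleStateless(String body) {
        return handler.apply(body);
    }
}
```

A session created on node A and a follow-up request routed to node B is exactly the load-balanced case described above: `handleWithSession` fails on B, while `handleStateless` succeeds on either node.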

Implementing Core Tools

After setting up the transport and request handler, we needed to figure out how to run queries from within the Vespa container itself, and the ExecutionFactory class turned out to be the key. It provided a straightforward way to construct and execute queries from inside a component, allowing us to retrieve schema information and handle responses using core Vespa objects that are initialized when the application is deployed.

We developed the same three tools as we had in the standalone MCP server. However, after many conversations with Claude Desktop, we noticed that the language model frequently needed to search the documentation and often required several attempts to construct a working query. To address this, we created a resource that provides the model with several example queries, and a simple tool that allows the LLM to read this resource when needed. Supplying these examples at the beginning of the conversation noticeably reduced the number of retries needed. We also added a prompt that lists the available tools along with their descriptions, so the user can easily get an overview of the server’s capabilities and how each tool can be used.
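A rough sketch of the two additions: a prompt whose text lists each tool with its description, and a resource of example queries exposed through a simple read tool. `Guidance`, `ToolInfo`, and the example YQL strings are hypothetical; the actual examples and wording in the server may differ.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch: an overview prompt listing the tools, and an
// example-queries resource with a tool that returns its content.
final class Guidance {
    record ToolInfo(String name, String description) {}

    // Illustrative YQL examples a model can read before writing its own queries.
    // The second one expects the user's text in a separate "query" request parameter.
    static final List<String> EXAMPLE_QUERIES = List.of(
            "select * from sources * where true limit 5",
            "select * from sources * where userQuery()");

    private Guidance() {}

    // Text of the overview prompt: one line per available tool.
    static String overviewPrompt(List<ToolInfo> tools) {
        return tools.stream()
                .map(t -> "- " + t.name() + ": " + t.description())
                .collect(Collectors.joining("\n", "Available tools:\n", ""));
    }

    // Handler for the simple tool that reads the example-queries resource.
    static String readExamples() {
        return String.join("\n", EXAMPLE_QUERIES);
    }
}
```

Serving the examples through a tool, and not only as a resource, lets the model fetch them on its own initiative, since tools are model-controlled while resources are application-controlled.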

The result was an MCP server running inside the Vespa container that works well both locally and in the cloud. While the implementation may seem straightforward in hindsight, our experience was quite different. There was a lot of back and forth, and we ran into several unexpected situations along the way. It is safe to say that we went through more coffee-fueled debugging sessions than ever before and read countless log messages. As MCP is still relatively new, we also had to adapt to frequent updates in the MCP SDK, some of which required us to rework parts of our implementation.

To make all of this work, we had to dive deep into both the MCP SDK and the Vespa engine codebase, reading documentation and studying internal logic to understand how to extend and integrate our server correctly. This process gave us valuable hands-on experience with building on top of complex and evolving systems, and strengthened our understanding of both Vespa and model-based architectures like MCP.

Seeing It in Action

In our experience, the MCP server can be a surprisingly helpful tool for several reasons. First and foremost, it allows you to query your Vespa application using natural language, making it easier to interact with the system, especially for those unfamiliar with Vespa's query syntax.

Another useful capability is the documentation search tool, which enables the language model to retrieve relevant sections of the official Vespa documentation. This is helpful not only for quick explanations and follow-up questions, but also for guidance during development. We frequently used this tool ourselves when building and debugging our own Vespa components.

Lastly, the MCP server allows the LLM to retrieve schema information and data from the application, and then explain it in natural language. This makes it easier to understand both the structure of the application and the data it operates on, which is especially valuable in larger or unfamiliar setups.

What’s Next

The MCP server is fully functional: it can run various types of queries, search the Vespa documentation, and retrieve schema information. We've designed it as a read-only tool focused on safe exploration and querying, rather than on enabling LLMs to modify data. When deploying to Vespa Cloud, a valid authorization token is also required to connect your MCP client to the server.

Looking ahead, we’re focusing on improving the interaction between the MCP server and Vespa applications. While the core functionality is in place, there are likely many additional capabilities that users will find useful, based on how they work with their applications.

Want to see the MCP server in action? Check out this sample app to explore how it interacts with Vespa.

Looking Back: Our Summer at Vespa.ai

So, how was the summer? Even though the project itself was very exciting and we learned a lot, the most valuable part - by far - was the opportunity to work at a place like Vespa.ai. Experiencing how top-tier engineers build complex systems in an agile and enjoyable environment gave us a unique chance to grow, both technically and personally. We were given a high degree of independence, which allowed us to experiment and learn continuously, while help and guidance were always just around the corner when we needed them.

We want to express our sincere gratitude to all our colleagues at Vespa.ai for giving us an outstanding summer internship experience. The tech startup environment is truly something unique, and we’ll carry the knowledge and insights we’ve gained with us.