Why a Search Platform (Not a Vector Database) is the Smarter Choice for AI Search

As AI-powered search requirements grow, companies face a critical choice: should they use a dedicated vector database, a search platform with built-in vector capabilities, or their existing cloud database with add-on vector capabilities provided by the vendor? The choice affects search accuracy, scalability, and cost.

Cloud data platforms may already house some of your data, but they may not have access to all of your content, and they often fall short in large-scale enterprise deployments of vector search. Vector databases, meanwhile, specialize in similarity search, but they aren’t a comprehensive search solution and often lack essential features such as ranking, filtering, and hybrid search: capabilities where traditional search platforms already excel.

In this blog, we’ll break down why search platforms with native vector support often provide the best balance of performance, flexibility, and cost-efficiency.

Cloud Data Platforms vs. Vector Pure Plays

Unlike cloud data platforms optimized for exact matches, vector databases excel at storing vector embeddings and performing similarity searches, making them particularly well-suited for GenAI and LLM applications. Recognizing this shift, mainstream database vendors have moved quickly to incorporate vector search capabilities as a strategic play to capture AI-driven workloads. These platforms already house vast amounts of enterprise data and aim to power GenAI applications directly, but they historically lacked robust semantic search capabilities.

📝Key takeaway: Retrofitted vector search solutions may work for small-scale applications, but they lack the efficiency and scalability required for enterprise AI search.

Many vendors have acquired technology rather than building it from the ground up. For instance:

- Snowflake acquired Neeva (whose CEO later became Snowflake’s CEO) to bolster its vector search capabilities with Cortex Search.

- Databricks acquired MosaicML (the basis of its Mosaic AI offering) to enhance its AI-powered search capabilities.

Despite this push, these retrofitted solutions often fall short in high-performance, large-scale deployments. They were not designed with full tensor support; vector storage, indexing, and querying were added after the fact.

📝Key takeaway: CIOs evaluating vector search solutions should assess three things: whether these add-on features meet the demands of their AI workloads, whether purpose-built search platforms offer a more scalable and performant alternative, and how much of their data (for example, PDF files) the solution actually covers.

For example, products like Snowflake Cortex Search and Databricks Mosaic AI operate in batch-only mode, lack real-time capabilities, and offer limited support for embeddings and search. Their ranking systems are essentially a “black box” and lack the precision needed for fine-tuned search results.

The Challenges of Standalone Vector Databases

Vector databases have become a game-changer for AI-powered search, recommendations, and similarity matching, but they come with significant tradeoffs. Businesses relying solely on vector databases may encounter scalability, performance, query flexibility, and cost challenges. Here’s a quick breakdown of these challenges:

Scalability Constraints

- Some vector databases lack true horizontal scaling (in data size and query load), complicating distributed deployments.

High Computational Costs

- Frequent updates might require reindexing, increasing operational costs.

- Many vector databases optimize for batch processing, making real-time updates costly.

Accuracy vs. Performance Tradeoffs

- Choosing and tuning parameters such as the distance metric and the indexing method can be difficult and requires expertise.

- Limited support for complex queries and structured filtering can impact relevance.
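To make the tuning point concrete: the distance metric alone can change which document ranks first. The minimal sketch below uses made-up two-dimensional vectors (real embeddings typically have hundreds of dimensions) and a brute-force scan rather than an approximate index; the document names are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def neg_euclidean(a, b):
    # Negated so that "higher means more similar" holds for all three metrics.
    return -math.dist(a, b)

# Toy 2-D vectors chosen to make the metrics disagree.
query = [1.0, 0.0]
docs = {
    "doc_a": [0.9, 0.1],  # close to the query in both direction and length
    "doc_b": [3.0, 1.0],  # slightly different direction, much larger magnitude
}

def top_hit(metric):
    # Brute-force scan: return the document id with the highest score.
    return max(docs, key=lambda name: metric(query, docs[name]))

for metric in (dot, cosine, neg_euclidean):
    print(f"{metric.__name__:>13}: {top_hit(metric)}")
```

Dot product favors `doc_b` because of its magnitude, while cosine similarity and Euclidean distance favor `doc_a`. Which behavior is correct depends on how the embedding model was trained, which is why the choice needs expertise.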

Limited Query Capabilities

- Lack of support for complex combinations of query terms and for relational queries makes structured data harder to work with.

- Filtering and ranking capabilities are often limited, restricting customization.

- Some vector databases require an external metadata store, adding system complexity.
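Why weak or external filtering hurts: if a filter is applied only after the vector search has returned its top-k hits, matching documents can be crowded out entirely. The brute-force sketch below uses a hypothetical `in_stock` attribute and toy vectors; production engines use approximate indexes and more sophisticated filtered-search strategies, but the failure mode is the same.

```python
import math

# Hypothetical toy corpus: each item has an embedding plus a structured attribute.
items = [
    {"id": 1, "vec": [1.0, 0.0], "in_stock": False},
    {"id": 2, "vec": [0.9, 0.1], "in_stock": False},
    {"id": 3, "vec": [0.8, 0.3], "in_stock": False},
    {"id": 4, "vec": [0.5, 0.5], "in_stock": True},
    {"id": 5, "vec": [0.1, 0.9], "in_stock": True},
]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def top_k(candidates, query, k):
    # Exact nearest-neighbor search by cosine similarity.
    return sorted(candidates, key=lambda it: cosine(query, it["vec"]), reverse=True)[:k]

query, k = [1.0, 0.0], 3

# Post-filtering: run the vector search first, then drop items failing the filter.
post = [it["id"] for it in top_k(items, query, k) if it["in_stock"]]

# Filtering inside the search: restrict candidates first, then rank them.
pre = [it["id"] for it in top_k([it for it in items if it["in_stock"]], query, k)]

print("post-filter:", post)       # empty: the top 3 neighbors are all out of stock
print("filtered search:", pre)
```

Post-filtering returns nothing even though two in-stock items exist, because all three nearest neighbors fail the filter. Evaluating the filter as part of the search avoids this.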

Vendor Lock-In and Ecosystem Limitations

- Some vector databases are fully managed cloud services with no self-hosting option.

- APIs and SDKs differ across platforms, making migrations difficult.

- Some vector databases rely on specialized hardware (GPU/TPU), limiting deployment flexibility.

Why a Search Platform with Vector Capabilities is the Smarter Choice

A standalone vector database won’t inherently improve search experiences. While vector similarity search is powerful, it’s only one part of delivering relevant results. Without robust ranking, filtering, and hybrid search capabilities, results may be similar but not necessarily precise or useful for end users.
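One simple way to combine keyword and vector results is reciprocal rank fusion (RRF), which rewards documents that rank well in both lists. It is shown here only as an illustration of hybrid retrieval, not as the method any particular platform uses (engines such as Vespa.ai typically combine signals in configurable ranking expressions instead); the document ids and result lists are made up.

```python
from collections import defaultdict

def reciprocal_rank_fusion(result_lists, k=60):
    """Fuse ranked lists of doc ids; k=60 is the constant commonly used for RRF."""
    scores = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

# Hypothetical result lists for a query such as "red running shoes".
keyword_hits = ["d3", "d1", "d7"]  # e.g., BM25 order: exact term matches
vector_hits = ["d1", "d5", "d3"]   # e.g., embedding-similarity order

print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
```

Documents found by both retrievers (`d1`, `d3`) rise to the top, while items found by only one still appear further down, so recall from the vector side is kept without losing the precision of exact keyword matches.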

📝Key takeaway: Rather than adding another specialized system, leveraging a search platform with native vector support can streamline architecture, improve relevance, and enhance performance.

Here’s why a search platform with vector capabilities is often the better choice:

Vector Database vs. Search Platform: Key Differences

| Feature | Standalone Vector Database | Search Platform (e.g., Vespa.ai) |
|---|---|---|
| Hybrid Search (Keyword + Vector) | ❌ Often lacks keyword search | ✅ Combines keyword, vector, and structured search |
| Boolean Operations Combined with Vector Search | ❌ Often lacks this functionality | ✅ Enables combining query components with boolean operations |
| Advanced Ranking & Relevance Tuning | ❌ Limited or manual tuning | ✅ Includes BM25, LTR, and hybrid ranking |
| Filtering & Faceting | ⚠️ Basic or requires external metadata store | ✅ Native support for structured filtering & faceting |
| Scalability & Performance | ⚠️ May require specialized infrastructure | ✅ Optimized for large-scale, real-time search |
| Real-Time Indexing & Updates | ⚠️ Many require batch processing | ✅ Supports instant indexing & real-time queries |
| Flexible Deployment | ❌ Often cloud-only, vendor-locked | ✅ Works on-prem, cloud, and hybrid environments |
| AI Model Integration | ⚠️ Requires separate ML pipeline | ✅ Directly runs ML models within the search engine |
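As an illustration of combining boolean operations, keyword search, and vector search in one request, the sketch below builds a JSON body for Vespa’s query API. The field names `embedding` and `price`, the query tensor `q`, and the rank profile `hybrid` are hypothetical and would have to be defined in your own schema; the code only constructs the request and does not contact a server.

```python
import json

def build_hybrid_query(text, query_vector, max_price, hits=10):
    """Build a Vespa query body (hypothetical schema: embedding, price, profile 'hybrid')."""
    yql = (
        "select * from sources * where "
        # OR: retrieve by vector similarity or by keyword match on the user's text
        "(({targetHits:100}nearestNeighbor(embedding, q)) or userQuery()) "
        # AND: a structured filter applied to both retrieval paths
        f"and price < {max_price}"
    )
    return {
        "yql": yql,
        "query": text,                   # text consumed by userQuery()
        "input.query(q)": query_vector,  # query tensor consumed by nearestNeighbor
        "ranking": "hybrid",             # hypothetical rank profile name
        "hits": hits,
    }

body = build_hybrid_query("red running shoes", [0.12, -0.03, 0.88], max_price=100)
print(json.dumps(body, indent=2))
```

To run it against a live deployment, you would POST `body` as JSON to the container’s `/search/` endpoint, for example with `requests.post("http://localhost:8080/search/", json=body)`. The `hybrid` rank profile would also need to declare `query(q)` as an input with a tensor type matching the `embedding` field.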

How Vespa.ai Overcomes the Challenges of a Standalone Vector Database

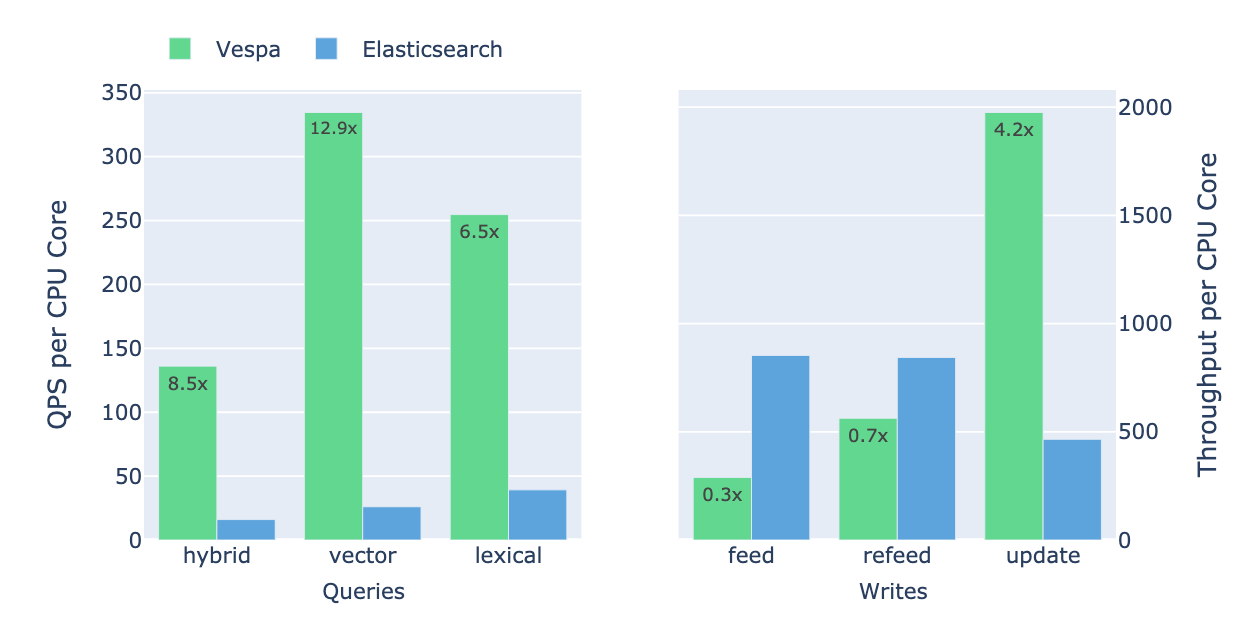

Not all search platforms are created equal. Since adding tensor support in 2014, Vespa.ai has evolved into a powerful, full-featured AI search and recommendation engine with unbeatable performance. A tensor is a generalization of a vector: vectors, matrices, and higher-dimensional arrays are all tensors. Unlike standalone vector databases that focus solely on similarity search, Vespa.ai offers a fully integrated platform combining vector search, multi-phase ranking, and large-scale AI inference, ensuring more accurate, scalable, and cost-efficient search solutions.
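To illustrate why general tensor support matters: a single embedding is a vector (a rank-1 tensor), but some retrieval models represent each document as a matrix with one embedding per token (a rank-2 tensor). The sketch below scores such matrices with ColBERT-style late interaction ("MaxSim") in plain Python with toy two-dimensional values. It shows the math only and is not Vespa’s tensor API.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def max_sim(query_matrix, doc_matrix):
    """Late-interaction score: for each query token, take its best-matching doc token, then sum."""
    return sum(max(dot(q, d) for d in doc_matrix) for q in query_matrix)

# Rank-2 tensors: one row (embedding) per token. Values are made up.
query_tokens = [[1.0, 0.0], [0.0, 1.0]]
doc_tokens = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]

print(max_sim(query_tokens, doc_tokens))  # 0.9 + 0.8, up to float rounding
```

A store that holds exactly one vector per document cannot express this scoring directly; it needs a data model in which a field can hold a matrix and ranking can compute over it.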

How Vespa Stands Out

Scalable, Real-Time AI-Powered Search

- Native distributed architecture enables seamless multi-node scaling for large datasets.

- Efficient real-time indexing minimizes update delays, ensuring fast and accurate search results.

Lower Costs & Smarter Query Execution

- Processes data in place, eliminating costly data movement.

- Optimizes vector search, keyword search, and structured filtering for cost-effective performance.

- Supports flexible deployment across on-prem, cloud, and hybrid environments, preventing vendor lock-in.

High-Precision Search

- Native AI inference runs machine-learned models directly within the search engine.

- Hybrid ranking models combine keyword relevance, vector similarity, and AI-driven ranking for superior accuracy.

- Multi-phase ranking improves search precision while keeping computational cost under control.
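The hybrid and multi-phase ideas above can be sketched together: a cheap first-phase score (here, an equal-weight mix of keyword relevance and vector closeness) is computed for every candidate, and an expensive second-phase model runs only on the best few. This loosely mirrors the first-phase/second-phase structure of Vespa rank profiles, where a rerank count limits phase two, but the feature names, weights, and the `clicks` signal here are hypothetical.

```python
import heapq

# Hypothetical per-document features; a real engine computes these from the index.
docs = [
    {"id": "a", "bm25": 0.9, "closeness": 0.8, "clicks": 0.0},
    {"id": "b", "bm25": 0.7, "closeness": 0.9, "clicks": 0.3},
    {"id": "c", "bm25": 0.2, "closeness": 0.3, "clicks": 0.9},
    {"id": "d", "bm25": 0.6, "closeness": 0.6, "clicks": 0.1},
    {"id": "e", "bm25": 0.1, "closeness": 0.2, "clicks": 0.0},
]

expensive_calls = 0

def first_phase(doc):
    # Cheap hybrid score: equal-weight sum of keyword relevance and vector closeness.
    return 0.5 * doc["bm25"] + 0.5 * doc["closeness"]

def second_phase(doc):
    # Stand-in for a costly model (e.g., a neural re-ranker); counts its invocations.
    global expensive_calls
    expensive_calls += 1
    return first_phase(doc) + 2.0 * doc["clicks"]

def rank(candidates, rerank_count, hits):
    # Phase 1: score every candidate cheaply and keep only the best rerank_count.
    shortlist = heapq.nlargest(rerank_count, candidates, key=first_phase)
    # Phase 2: apply the expensive scorer to the shortlist only.
    reranked = sorted(shortlist, key=second_phase, reverse=True)
    return [d["id"] for d in reranked[:hits]]

print(rank(docs, rerank_count=3, hits=2), "expensive calls:", expensive_calls)
```

Only three of the five documents reach the expensive model. Document `c` would win phase two but is cut in phase one; raising `rerank_count` trades more compute for a better chance of surfacing such documents, the same ranking-depth lever discussed in the Vinted example.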

Advanced Query Capabilities & Hybrid Search

- Full support for structured queries, filtering, and full-text search for precise results.

- Robust metadata filtering and multi-condition queries enhance search and recommendation workflows.

No Cold-Start Problems & Continuous Indexing

- Instant indexing ensures new data is available and searchable in real time.

- Dynamic embedding generation prevents cold-start issues for new users and items.

- Incremental updates to model inputs keep AI-powered search aligned with evolving user behavior.
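One common way to sidestep cold start, shown here as an assumption rather than a description of Vespa’s internals, is to build a user embedding on the fly from the items a new user interacts with and to update it incrementally as a running mean. The function name and the session data below are hypothetical.

```python
def update_user_embedding(user_vec, n_events, item_vec):
    """Fold one interaction into a running-mean user embedding; returns (vector, count)."""
    if user_vec is None:
        # Brand-new user with no history: start from the first item they touch.
        return list(item_vec), 1
    n = n_events + 1
    # Incremental mean update: u_new = u + (i - u) / n
    return [u + (i - u) / n for u, i in zip(user_vec, item_vec)], n

# Hypothetical embeddings of items a new user clicks during a first session.
session = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

user, n = None, 0
for item in session:
    user, n = update_user_embedding(user, n, item)
print(user, n)  # approximately the mean of the three item vectors
```

Because each update touches only the current vector and a count, the profile can be refreshed after every interaction without reprocessing the user’s full history.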

Real-World Example: Vinted’s Transition to Vespa.ai

Vinted, Europe’s largest online C2C marketplace for second-hand fashion, faced challenges as its platform grew. The increasing data volume and query complexity led to high operational costs and the need for frequent hardware upgrades. To address these issues, Vinted migrated to Vespa.ai in 2023.

Key Outcomes of the Migration:

- Reduced Infrastructure: Vinted halved the number of servers it needed and consolidated six Elasticsearch clusters into a single Vespa cluster.

- Enhanced Performance: The migration improved search latency by 2.5x and indexing latency by 3x. Additionally, the time for changes to appear in search results dropped from 300 seconds to just 5 seconds.

- Improved Search Relevance: Vinted improved the relevance of its search results by more than tripling the ranking depth (to up to 200,000 candidate items), leading to a better user experience.

- Cost Efficiency: The transition to Vespa.ai cut total cost of ownership to approximately half that of the previous system, while also boosting user engagement and conversions.

Vinted’s experience underscores the advantages of adopting a search platform with integrated vector capabilities. By leveraging Vespa.ai, they significantly improved scalability, performance, and cost-efficiency.

The Bottom Line: Search Platforms Provide More than Just Similarity Search

Choosing the right tool isn’t about following trends but effectively meeting your search needs. A dedicated vector database might be useful for certain niche use cases, but it should complement—not replace—your search platform.

"Vector search boosts recall, while traditional search improves precision. The best approach? A hybrid search that balances both for the most effective search experience."

Kamran Khan

CEO, Pure Insights

A search platform with native vector support, like Vespa.ai, gives you the best of both worlds: vector similarity search along with advanced ranking, filtering, and scalability.

Want to power AI search with real-time, scalable ranking? Start your Vespa.ai trial now.