Why Life Sciences AI Is a Search Problem (Part 4 of 5)

Photo by Ousa Chea on Unsplash

The future of GenAI in pharma and healthcare isn’t about building bigger models — it’s about smarter retrieval.

By Harini Gopalakrishnan, Head of GTM, Vespa.ai

Originally presented at the Fierce Pharma Webinar: “You Have the Model, Now What? Lessons on Making AI Work in Life Sciences and Breaking the Chatbot Mirage.” This five-part quick-read series summarizes the panel discussion and highlights its key topics.

→ Full video: fierce-pharma-webinar

In Healthcare AI: Context Is Everything

Having covered the life sciences value chain, we move on to healthcare, where we look at both payers and health systems.

Providers: Multimodality Offers the Real Perspective

From the provider perspective, the question was what physicians really need in order to embrace the multimodality of their rich patient data and use it to make informed care decisions.

“In healthcare, the richest insights often are in the intersection of structured claims, unstructured notes, imaging data, and what we call the kitchen drawer of EHRs, which is the mixed media folder.”

Dr. Salim Afshar, AI Innovation lead at Harvard Medical School and co-founder of Reveal HealthTech, emphasized that the richest insights in healthcare lie at the intersection of structured, unstructured, and imaging data.

“Language alone can’t delineate disease — the signal lives in the images and the relationships between data types.”

Future retrieval systems should unify multimodal data and surface real-time insights for clinicians. He gave the example of lung cancer subtypes that can be distinguished only through imaging patterns, not through textual pathology notes alone. CNN models have advanced to the point where one can reasonably segment pathology slides and classify cancer subtypes, and the resulting classification can be stored as context alongside other patient metadata for future use.

Retrospective analysis of this data would benefit care decisions and the identification of disease trends. It would also help get patients into trials faster, based on criteria that go beyond structured clinical characteristics.

Key Takeaways

- Retrieval across modalities (notes + images + labs) enables true precision medicine.
- AI should accompany clinicians, organizing complexity rather than dictating care.
- Real-time retrieval can inform decisions at the point of care.

Payers: Personalization Through Context

The future: Moving from “AI as a tool” to “learning to work with AI”—asking the right next question based on persona and context

Dr. Afshar highlighted that for payers, the biggest challenge in using AI is the lack of context: data is siloed and hard to retrieve, which limits meaningful personalization.

Most care-management programs today apply generic plans to new members, rather than dynamically tailoring engagement.

The analogy is to consumer platforms like Netflix or Spotify: personalization works when the system knows the user’s profile, history, and intent. Similarly, healthcare payers could use retrieval and similarity search to understand each member’s context, compare them with look-alike patients, and adjust recommendations or care plans in real time.

This is a learning loop, not a static process — the more questions the system asks and the more it learns, the smarter its recommendations become.
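The look-alike comparison described above can be illustrated with a small similarity search. This is a minimal sketch under stated assumptions, not how any payer system or the panelists' tooling works: each member is assumed to already have a numeric profile vector (the three-dimensional vectors, the member IDs, and the `find_lookalikes` helper are all hypothetical), and cosine similarity stands in for whatever ranking a production retrieval engine would use.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def find_lookalikes(target, members, k=2):
    """Return the IDs of the k members most similar to the target vector."""
    scored = [
        (cosine_similarity(target, vector), member_id)
        for member_id, vector in members.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [member_id for _, member_id in scored[:k]]


# Hypothetical profile vectors; in practice these would be derived from
# claims, history, and engagement data.
members = {
    "member_a": [0.9, 0.1, 0.3],
    "member_b": [0.1, 0.8, 0.5],
    "member_c": [0.85, 0.15, 0.35],
}
new_member = [0.88, 0.12, 0.3]

lookalikes = find_lookalikes(new_member, members, k=2)
```

A care plan for the new member could then start from what worked for the returned look-alikes instead of from a generic template.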

Key Takeaways

- Payer personalization fails today because contextual data is siloed and not retrievable.
- Dynamic engagement should mimic consumer personalization models: asking the next best question based on prior behavior and similar profiles.
- Retrieval-based AI enables continuous learning and adaptive risk stratification, turning static care plans into evolving, data-driven experiences.

The Demo

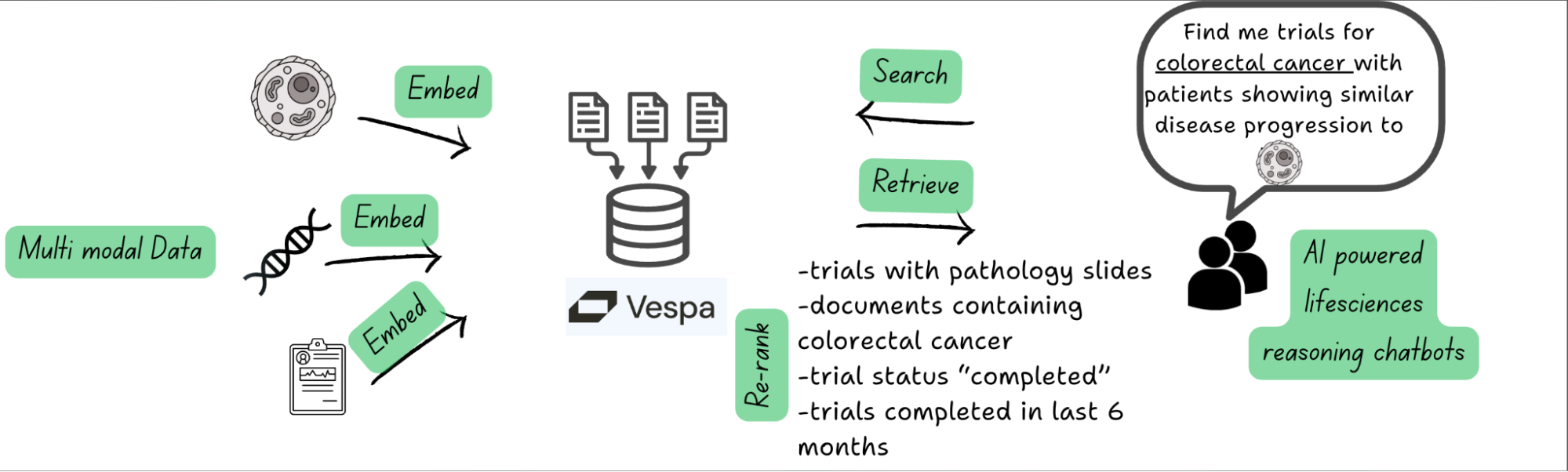

The session concluded with Biocanvas, a multimodal retrieval engine built on Vespa.ai by Reveal HealthTech. With structured (lab values), unstructured (clinical notes), and visual (CT/MRI) data embedded into a shared tensor space, researchers could simply query:

“Find patients aged 25–45 with brain scans showing similar tumor patterns and disease progression.”

Within seconds, Biocanvas retrieved explainable, traceable cohorts, collapsing months of manual trial matching into minutes.
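To make the shape of such a query concrete, here is a minimal sketch that combines a structured filter (the age range) with similarity ranking over scan embeddings. It is not the Biocanvas implementation and does not use the Vespa.ai API: the `PatientRecord` type, the two-dimensional embeddings, and the `find_cohort` helper are hypothetical, and a linear scan in plain Python stands in for a real retrieval engine.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PatientRecord:
    patient_id: str
    age: int
    scan_embedding: List[float]  # hypothetical vector from an imaging model


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_cohort(
    query_embedding: List[float],
    records: List[PatientRecord],
    min_age: int,
    max_age: int,
    top_k: int = 10,
) -> List[Tuple[float, str, int]]:
    """Filter by age, then rank the remaining patients by scan similarity.

    Each result keeps the score and the age that satisfied the filter, so a
    reviewer can trace why a patient was included.
    """
    candidates = [r for r in records if min_age <= r.age <= max_age]
    scored = [
        (round(cosine(query_embedding, r.scan_embedding), 3), r.patient_id, r.age)
        for r in candidates
    ]
    scored.sort(key=lambda row: row[0], reverse=True)
    return scored[:top_k]


# Hypothetical records; real embeddings would have hundreds of dimensions.
records = [
    PatientRecord("p1", 30, [1.0, 0.0]),
    PatientRecord("p2", 60, [1.0, 0.0]),  # similar scan, but outside the age range
    PatientRecord("p3", 41, [0.0, 1.0]),
    PatientRecord("p4", 25, [0.8, 0.6]),
]
reference_scan = [1.0, 0.0]

cohort = find_cohort(reference_scan, records, min_age=25, max_age=45, top_k=2)
cohort_ids = [patient_id for _, patient_id, _ in cohort]
```

Returning the score and the filtered attribute with each hit is one simple way to keep results traceable; a production engine would typically run the filter and the nearest-neighbor search in a single indexed query rather than scanning every record.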

Key Takeaways

- Tensor embeddings unify multimodal data for natural-language search.
- Explainable and auditable retrieval builds compliance and confidence.
- Real-time contextualization turns fragmented data into discovery.