Nexla + Vespa, The Power Duo for AI-Ready Data Pipelines

Partner Spotlight: Nexla

AI is evolving quickly. What started with Q&A chatbots has already grown into deep research applications and, now, autonomous AI agents. Vespa is proud to be at the center of this shift, powering some of the most advanced adopters of AI, such as Perplexity. To help organizations get the most out of Vespa, we’re building a robust partner ecosystem. These partners help bring Vespa’s AI-native capabilities into real-world deployments across industries.

Meet the innovators shaping the future of AI. Today’s spotlight: Nexla

When AI systems fall short, it’s rarely the model’s fault. More often, it’s the messy reality of data scattered across systems that never quite stay in sync. That’s why Nexla and Vespa partnered.

Nexla makes data usable.

Vespa makes data intelligent at scale.

Together, they turn messy, distributed enterprise data into real-time AI search, recommendation, and RAG systems, without months of custom code gluing things together.

Nexla: Making Enterprise Data Usable

Nexla is an enterprise-grade, AI-powered data integration platform that turns raw data from any source into production-ready data products. It provides a declarative, no-code way to move, transform, and validate data across ETL/ELT, reverse ETL, streaming, APIs, and RAG pipelines.

Think of Nexla as the layer that answers: “How do we reliably get the right data, in the right shape, to the systems that need it?”

Core capabilities:

- 500+ Bidirectional Connectors: Pull data from databases, APIs, cloud storage, SaaS apps, and data warehouses, including systems like Salesforce, Snowflake, and Amazon S3.

- Metadata Intelligence: Nexla automatically scans sources and generates Nexsets: virtual, ready-to-use data products with schemas, samples, and validation rules. Example: if a price field suddenly switches from numeric to string, Nexla detects it before bad data reaches production search.

- Express (conversational pipelines): A conversational AI interface where you simply describe what you need. Example: you can say, “Pull customer data from Salesforce and merge with Google Analytics,” and it builds the pipeline for you.

- Universal integration styles: Supports ELT, ETL, CDC, R-ETL, streaming, API integration, and FTP in a single platform.
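Nexla’s detection internals aren’t described here, but the price-field example above can be illustrated with a minimal sketch, assuming a simple approach: infer a coarse type for each field from a baseline sample, then flag fields whose observed types change in a new batch. The functions `infer_schema` and `detect_drift` are hypothetical names, not part of any Nexla API.

```python
from typing import Any


def infer_type(value: Any) -> str:
    """Map a Python value to a coarse type name (bool is checked before int)."""
    if isinstance(value, bool):
        return "bool"
    if isinstance(value, (int, float)):
        return "number"
    if isinstance(value, str):
        return "string"
    if value is None:
        return "null"
    return type(value).__name__


def infer_schema(records: list[dict[str, Any]]) -> dict[str, set[str]]:
    """Collect the set of observed types per field across a sample of records."""
    schema: dict[str, set[str]] = {}
    for record in records:
        for field, value in record.items():
            schema.setdefault(field, set()).add(infer_type(value))
    return schema


def detect_drift(
    baseline: dict[str, set[str]], current: dict[str, set[str]]
) -> dict[str, tuple[set[str], set[str]]]:
    """Return {field: (expected_types, observed_types)} for fields whose types changed."""
    drift = {}
    for field, types in current.items():
        expected = baseline.get(field)
        if expected is not None and types != expected:
            drift[field] = (expected, types)
    return drift


baseline = infer_schema([{"sku": "A1", "price": 19.99}])
current = infer_schema([{"sku": "A2", "price": "19.99"}])
print(detect_drift(baseline, current))  # price went from number to string
```

A real system would also track nullability, new or missing fields, and value distributions; this sketch only compares type sets.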

Nexla processes over 1 trillion records monthly for companies like DoorDash, LinkedIn, Carrier, and LiveRamp.

Vespa: Where Retrieval Becomes Reasoning

Vespa is a production-grade AI search platform that combines distributed text search, vector search, structured filtering, and machine-learned ranking in a single system.

Think of Vespa as the engine that answers: “Given all this data, how do we retrieve, rank, and reason over it in real time?”

It powers demanding applications like Perplexity and supports search, recommendations, personalization, and RAG at massive scale.

Core capabilities:

- Unified AI Search and Retrieval: Vespa natively combines vector and tensor search for semantic retrieval, full-text search for precise keyword matching, and structured filtering on attributes like categories, prices, and dates, enabling richer, contextual search without stitching multiple systems together.

- Real-time Retrieval and Inference at Scale: Rather than separating indexing, ranking, and inference across multiple systems, Vespa performs real-time machine-learned ranking and model inference where the data lives. This means you can serve fresh, personalized results with predictable sub-100ms latency, even for large datasets.

- Multi-Phase Ranking and Custom Logic: Vespa lets you embed custom ranking logic, including ML models such as XGBoost or models exported to ONNX, directly into your search pipeline. You can combine relevance signals, business rules, and semantic vectors in multi-stage ranking to fine-tune which results surface first.

- Massive Scalability with High Throughput: Designed for real-world, high-traffic applications, Vespa scales horizontally across clusters, handling billions of documents with sub-100ms query latency and up to 100k writes per second per node.

- Multi-Vector and Multi-Modal Retrieval: Vespa natively handles multiple vectors per document, with support for token-level embeddings, ColPali-based visual document retrieval, and tensor-based computations for precise, cross-modal relevance and ranking.
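To make token-level embeddings concrete, here is a minimal sketch of ColBERT-style late interaction (often called MaxSim), one common way to score documents that carry many vectors: for each query token embedding, take its best-matching document token by dot product, then sum those maxima. This is plain Python for illustration only; in Vespa, this kind of scoring would typically be written as a tensor expression in a rank profile rather than in application code.

```python
def dot(a: list[float], b: list[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_tokens: list[list[float]], doc_tokens: list[list[float]]) -> float:
    """Late-interaction score: sum over query tokens of the best dot product
    against any document token."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


query = [[1.0, 0.0], [0.0, 1.0]]      # two query token embeddings
doc_a = [[1.0, 0.0], [0.5, 0.5]]      # two document token embeddings
doc_b = [[0.0, 1.0]]                  # one document token embedding
print(maxsim(query, doc_a), maxsim(query, doc_b))
```

Because every query token finds its own best match, a document can score well by covering different parts of the query with different tokens, which a single pooled vector cannot capture.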

GigaOm recognized Vespa as a leader in vector databases for two consecutive years, noting its performance advantages over alternatives like Elasticsearch, including up to 12.9X higher throughput per CPU core for vector search.

How Nexla and Vespa Work Together

The Nexla-Vespa partnership removes one of the hardest parts of AI systems: getting clean, well-modeled data into a high-performance retrieval engine, continuously.

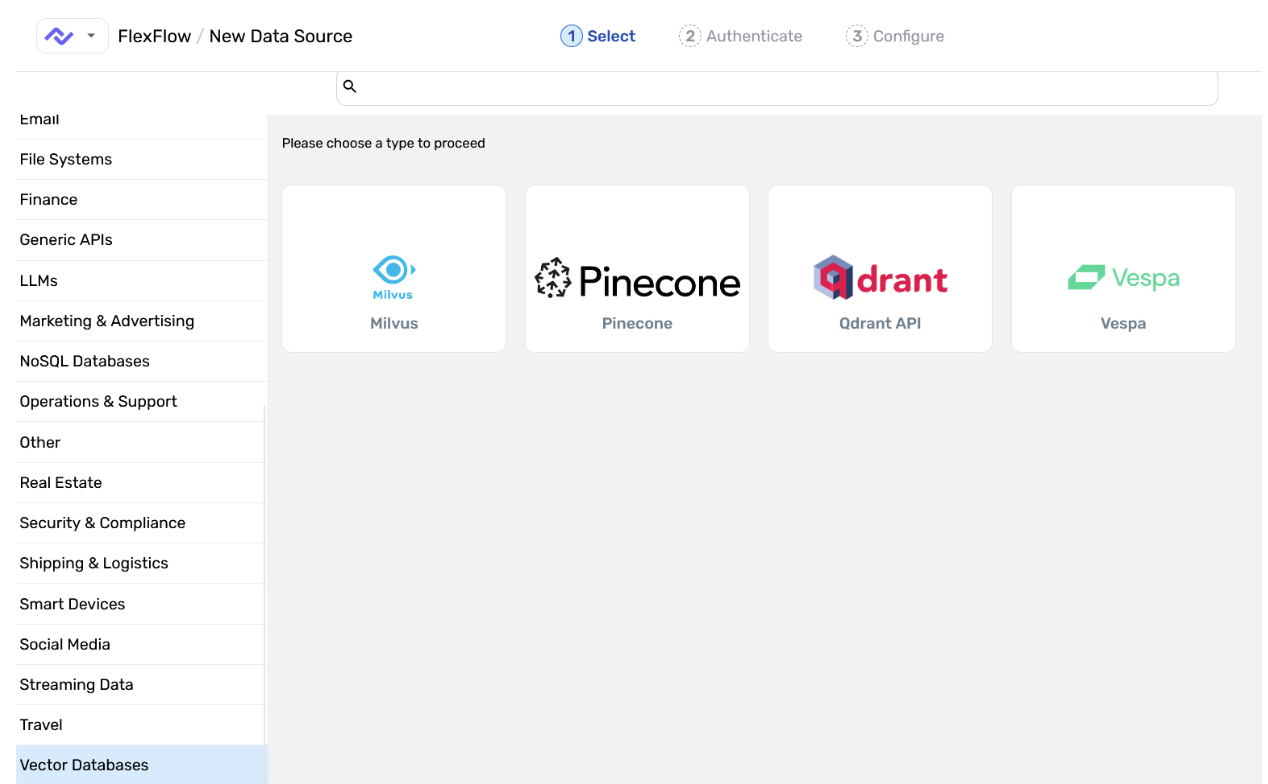

Nexla recently launched a Vespa connector that makes data integration with Vespa seamless. The integration includes:

Vespa Connector in Nexla:

Handles all data piping from sources like Amazon S3, PostgreSQL, Pinecone, Snowflake, and others directly into Vespa:

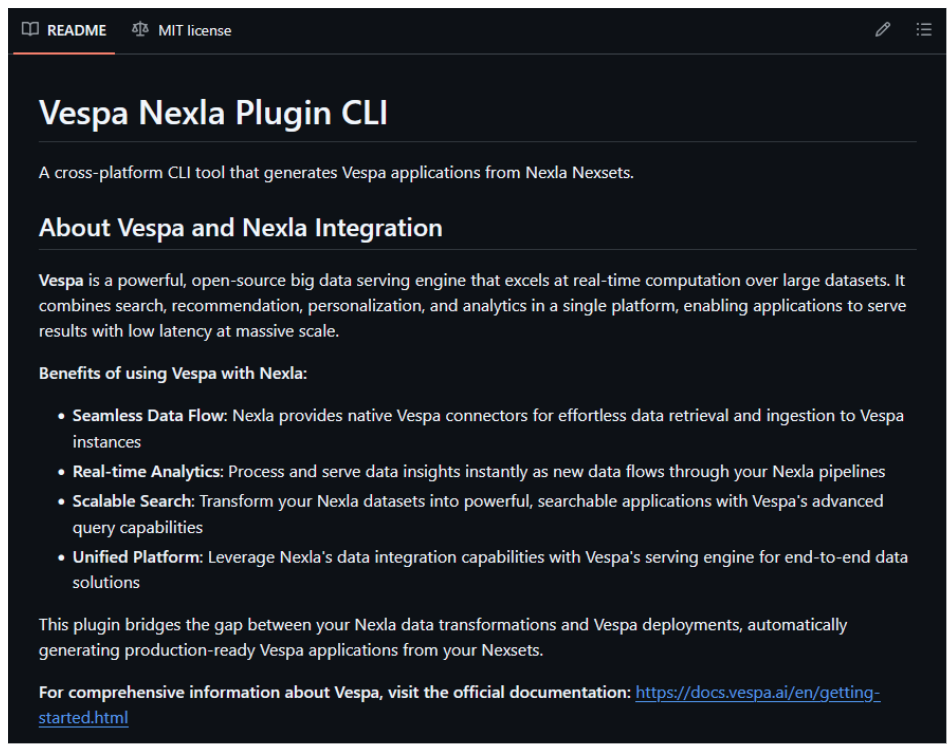

Vespa Nexla Plugin CLI: Automatically generates draft Vespa application packages (including schema files) directly from a Nexset, eliminating manual configuration:

This means you can move data from S3 to Vespa, migrate from Pinecone to Vespa, or sync PostgreSQL to Vespa, all without writing a single line of code.
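How the Plugin CLI maps a Nexset to a schema isn’t described here. As a rough illustration of the idea only, the hypothetical `draft_schema` helper below infers a Vespa schema from one sample record, under simple assumptions: strings become `string`, integers `long`, floats `double`, booleans `bool`, and numeric lists become a dense `tensor<float>` field. Fields you name as text get `index` for full-text search; everything else gets `attribute` for filtering and ranking.

```python
from typing import Any


def vespa_type(value: Any) -> str:
    """Guess a Vespa field type from a sample value (bool checked before int)."""
    if isinstance(value, bool):
        return "bool"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    if isinstance(value, list) and value and all(isinstance(v, (int, float)) for v in value):
        return f"tensor<float>(x[{len(value)}])"
    return "string"


def draft_schema(name: str, sample: dict[str, Any], text_fields: set[str]) -> str:
    """Emit a draft .sd schema; text fields are indexed, the rest are attributes."""
    lines = [f"schema {name} {{", f"    document {name} {{"]
    for field, value in sample.items():
        indexing = "index | summary" if field in text_fields else "attribute | summary"
        lines += [
            f"        field {field} type {vespa_type(value)} {{",
            f"            indexing: {indexing}",
            "        }",
        ]
    lines += ["    }", "}"]
    return "\n".join(lines)


sample = {"sku": "A1", "title": "Red running shoe", "price": 19.99,
          "in_stock": True, "embedding": [0.1, 0.2, 0.3]}
print(draft_schema("product", sample, text_fields={"title"}))
```

A draft like this is a starting point: vector fields used for approximate nearest-neighbor search would usually also need a distance metric and an HNSW index, and rank profiles still have to be written by hand.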

When Nexla Clients Should Use Vespa

You’re a Nexla client. Use Vespa when you need:

Advanced AI search and RAG applications: If you’re building intelligent search, recommendation systems, or RAG applications that require hybrid search (combining semantic vector search with keyword matching and metadata filtering), Vespa is purpose-built for this. Nexla gets your data into Vespa, while Vespa delivers production-grade AI search with machine-learned ranking.
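A hybrid query in Vespa is expressed in YQL. The sketch below builds a request body for Vespa’s `/search/` endpoint that ORs approximate nearest-neighbor retrieval with keyword matching (`userQuery()`, driven by the `query` parameter) and ANDs a metadata filter on top. It assumes a hypothetical `product` schema with an `embedding` tensor field and a `price` attribute, plus a hypothetical rank profile named `hybrid` that declares a `query(q)` input; adjust those names to your own application.

```python
import json


def hybrid_query_body(text: str, query_vector: list[float], max_price: float,
                      target_hits: int = 100, hits: int = 10) -> dict:
    """Build a Vespa query combining vector search, keyword search, and a filter."""
    yql = (
        "select * from product where "
        f"({{targetHits: {target_hits}}}nearestNeighbor(embedding, q) or userQuery()) "
        f"and price < {max_price}"
    )
    return {
        "yql": yql,
        "query": text,  # consumed by userQuery() for keyword matching
        "input.query(q)": "[" + ", ".join(str(x) for x in query_vector) + "]",
        "ranking": "hybrid",  # hypothetical rank profile that blends both signals
        "hits": hits,
    }


body = hybrid_query_body("red running shoes", [0.1, 0.2], max_price=50)
print(json.dumps(body, indent=2))
```

The JSON body would then be POSTed to the container’s `/search/` path (for a local deployment, typically `http://localhost:8080/search/`). How the vector and keyword scores are combined is decided by the rank profile, not by the query itself.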

Real-time, high-scale query performance: When you need to serve thousands of queries per second across billions of documents with sub-100ms latency, Vespa’s distributed architecture scales horizontally without compromising quality. Nexla ensures your data flows continuously into Vespa with incremental updates and CDC support.
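Incremental sync means each change at the source becomes a single document operation in Vespa. The sketch below translates change-data-capture events into Vespa’s JSON feed operations (`put`, `update` with `assign`, and `remove`), using document IDs of the form `id:<namespace>:<doctype>::<key>`. The CDC event shape (`op`, `key`, `after`, `changed`) and the default `shop`/`product` names are hypothetical assumptions for illustration, not Nexla’s internal format.

```python
from typing import Any


def cdc_to_vespa(event: dict[str, Any], namespace: str = "shop",
                 doctype: str = "product") -> dict[str, Any]:
    """Map one (hypothetical) CDC event to a Vespa JSON feed operation."""
    doc_id = f"id:{namespace}:{doctype}::{event['key']}"
    op = event["op"]
    if op == "insert":
        # Full document write.
        return {"put": doc_id, "fields": event["after"]}
    if op == "update":
        # Partial update: only the changed fields are assigned new values.
        return {"update": doc_id,
                "fields": {k: {"assign": v} for k, v in event["changed"].items()}}
    if op == "delete":
        return {"remove": doc_id}
    raise ValueError(f"unsupported CDC operation: {op!r}")


print(cdc_to_vespa({"op": "update", "key": "A1", "changed": {"price": 17.5}}))
```

Using partial updates for changed columns avoids rewriting whole documents, which keeps write volume low when only a price or stock level changes.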

Complex ranking and inference: If your use case requires multi-phase ranking, custom ML models, or LLM integration at query time, Vespa executes these operations locally where data lives, avoiding costly data movement. Nexla prepares and transforms your data into the exact schema Vespa needs.
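The idea behind multi-phase ranking can be sketched in a few lines: score every candidate with a cheap first-phase function, then spend the expensive second-phase model only on the best few. The helper below is a simplified, single-node illustration with hypothetical names; in Vespa the equivalent is configured in a rank profile’s first-phase and second-phase expressions with a `rerank-count`, evaluated per content node, and documents outside the rerank window keep their first-phase scores rather than being dropped as they are here.

```python
from typing import Any, Callable

Doc = dict[str, Any]


def two_phase_rank(candidates: list[Doc],
                   first_phase: Callable[[Doc], float],
                   second_phase: Callable[[Doc], float],
                   rerank_count: int = 100, hits: int = 10) -> list[Doc]:
    """Cheap scoring on all candidates, expensive rescoring on the top rerank_count."""
    shortlist = sorted(candidates, key=first_phase, reverse=True)[:rerank_count]
    return sorted(shortlist, key=second_phase, reverse=True)[:hits]


docs = [
    {"id": "a", "bm25": 3.0, "model": 0.10},
    {"id": "b", "bm25": 2.0, "model": 0.90},
    {"id": "c", "bm25": 1.0, "model": 0.99},
]
top = two_phase_rank(docs, lambda d: d["bm25"], lambda d: d["model"], rerank_count=2, hits=2)
print([d["id"] for d in top])
```

Note that `c` never reaches the second phase despite having the best model score: the first phase must be good enough to keep relevant documents in the shortlist, which is the main tuning trade-off of this design.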

Cost efficiency at scale: Vespa delivers 5X infrastructure cost savings compared to alternatives like Elasticsearch while handling vector, lexical, and hybrid queries. Nexla minimizes integration costs by automating pipeline creation and schema management.

When Vespa Clients Should Use Nexla

You’re a Vespa client. Use Nexla when you need:

Multi-source data consolidation: Vespa is your search and inference engine, but your data lives everywhere: S3 buckets, PostgreSQL databases, Snowflake warehouses, Salesforce CRMs, APIs, and files. Nexla connects to 500+ sources with bidirectional connectors and consolidates that data into Vespa without custom ETL scripts.

Automated schema generation and management: Instead of manually writing Vespa schema files and managing schema evolution, Nexla’s Plugin CLI auto-generates schemas from your Nexsets. As source schemas change, Nexla’s metadata intelligence detects changes and propagates them downstream automatically.

Data transformation and enrichment: Before data hits Vespa, it often needs cleaning, filtering, enrichment, or format conversion. Nexla provides a no-code transformation library and supports custom SQL, Python, or JavaScript, all without maintaining separate ETL infrastructure.

Vector database migration: Moving from Pinecone, Weaviate, or another vector database to Vespa? Nexla handles the migration with zero code, extracting records, transforming data to match Vespa’s schema, and syncing documents continuously.
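The core transformation in a vector database migration is a record-shape mapping. The sketch below assumes Pinecone-style records (`id`, dense `values`, and a flat `metadata` dict) and converts each into a Vespa `put` operation, storing the vector in a dense tensor field using the `{"values": [...]}` short form and copying metadata into regular fields. The namespace `migrated`, document type `doc`, and field name `embedding` are hypothetical placeholders; sparse vectors are ignored in this sketch.

```python
import json
from typing import Any, Iterable


def pinecone_to_vespa_put(record: dict[str, Any], namespace: str = "migrated",
                          doctype: str = "doc", vector_field: str = "embedding") -> dict[str, Any]:
    """Convert one Pinecone-style record into a Vespa JSON put operation."""
    fields = dict(record.get("metadata", {}))  # metadata keys become Vespa fields
    fields[vector_field] = {"values": list(record["values"])}  # dense tensor short form
    return {"put": f"id:{namespace}:{doctype}::{record['id']}", "fields": fields}


def to_jsonl(records: Iterable[dict[str, Any]]) -> str:
    """One feed operation per line (JSONL)."""
    return "\n".join(json.dumps(pinecone_to_vespa_put(r)) for r in records)


records = [
    {"id": "vec1", "values": [0.1, 0.2], "metadata": {"genre": "drama"}},
    {"id": "vec2", "values": [0.3, 0.4], "metadata": {"genre": "comedy"}},
]
print(to_jsonl(records))
```

The resulting JSONL could be written to a file and fed with the Vespa CLI’s `vespa feed` command, provided the target schema defines matching fields.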

Data quality and monitoring: Nexla continuously monitors data flows with built-in validation rules, error handling, and automated alerts. When data quality issues arise, Nexla quarantines bad records and provides audit trails, ensuring Vespa always receives clean, trustworthy data.
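A minimal sketch of the quarantine pattern, assuming validation rules are simple named predicates: each record is checked against every rule, passing records continue toward Vespa, and failing records are set aside together with the names of the rules they broke, which doubles as a basic audit trail. The rule set and function names are hypothetical, not Nexla’s rule syntax.

```python
from typing import Any, Callable

Record = dict[str, Any]
Rule = tuple[str, Callable[[Record], bool]]

RULES: list[Rule] = [
    ("price is numeric",
     lambda r: isinstance(r.get("price"), (int, float)) and not isinstance(r.get("price"), bool)),
    ("sku is present", lambda r: bool(r.get("sku"))),
]


def validate(records: list[Record], rules: list[Rule] = RULES) -> tuple[list[Record], list[dict]]:
    """Split records into (valid, quarantined); quarantined entries list failed rules."""
    valid, quarantined = [], []
    for record in records:
        failures = [name for name, check in rules if not check(record)]
        if failures:
            quarantined.append({"record": record, "errors": failures})
        else:
            valid.append(record)
    return valid, quarantined


good, bad = validate([{"sku": "A1", "price": 19.99},
                      {"sku": "A2", "price": "19.99"},
                      {"price": 5}])
print(len(good), [q["errors"] for q in bad])
```

Quarantining instead of dropping keeps bad records available for inspection and replay once the upstream issue is fixed.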

Real-time and streaming pipelines: Vespa supports real-time updates, but getting real-time data from streaming sources (Kafka, APIs, databases with CDC) requires integration logic. Nexla handles streaming, batch, and hybrid integration styles, optimizing throughput and latency for each source type.
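One common way to balance throughput and latency when feeding from a stream is micro-batching: buffer records and flush when either the batch is full or the oldest buffered record has waited too long. The `MicroBatcher` class below is a hypothetical sketch of that technique, not Nexla’s implementation. For simplicity it only checks the wait time when a new record arrives (there is no background timer), and it accepts an injectable clock so the behavior can be tested deterministically.

```python
import time
from typing import Any, Callable


class MicroBatcher:
    """Buffer records; flush when max_size is reached or the oldest record
    has waited at least max_wait_s (checked on each add)."""

    def __init__(self, flush: Callable[[list[Any]], None], max_size: int = 100,
                 max_wait_s: float = 0.5, clock: Callable[[], float] = time.monotonic):
        self._flush = flush
        self.max_size = max_size
        self.max_wait_s = max_wait_s
        self._clock = clock
        self._buffer: list[Any] = []
        self._first_at = 0.0

    def add(self, item: Any) -> None:
        if not self._buffer:
            self._first_at = self._clock()  # start the wait timer on the first buffered item
        self._buffer.append(item)
        too_big = len(self._buffer) >= self.max_size
        too_old = self._clock() - self._first_at >= self.max_wait_s
        if too_big or too_old:
            self.flush_now()

    def flush_now(self) -> None:
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._flush(batch)


batches: list[list[int]] = []
batcher = MicroBatcher(batches.append, max_size=3, max_wait_s=1.0)
for n in range(7):
    batcher.add(n)
batcher.flush_now()  # drain whatever is left, e.g. on shutdown
print(batches)
```

Larger `max_size` values favor throughput for bulk sources, while a small `max_wait_s` bounds how stale data in Vespa can get for low-volume streams.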

Conclusion

Nexla solves data readiness.

Vespa solves intelligence and precision at scale.

Together, they give teams a clean, practical path from raw enterprise data to real-time AI applications. Vespa gives you production-grade vector search, hybrid retrieval, and RAG capabilities at any scale. Nexla eliminates months of pipeline development and makes multi-source data flows conversational.

Ready to explore?

Start at express.dev for conversational pipeline building, or explore the Vespa connector in Nexla’s platform to see how quickly your data can power real AI applications.