How Perplexity beat Google on AI Search with Vespa.ai

Perplexity recently made the search service they have developed on Vespa.ai to power the Perplexity RAG application available to third parties.

As part of this, they demonstrate the quality of their results using a quality evaluation tool they have made available open source. It shows once again how the quality of Perplexity as a RAG solution can be traced back to the quality of the search results they achieve:

Perplexity determined that a retrieval system for high-quality RAG needs to satisfy these criteria:

- Completeness, freshness, and speed: the search index must be comprehensive, constantly updated, and engineered for realtime latency.

- Fine-grained content understanding: AI model brittleness and limited context windows require search results to be presented and ranked at the most granular level possible.

- Hybrid retrieval and ranking: both the retrieval and ranking pipelines must incorporate lexical and semantic signals for the results to be useful for AI.

They found that neither traditional search engines nor vector databases were up for the task:

Finally, we observed the AI ecosystem bifurcate between lexical search and semantic search, with systems emerging that were over-optimized for one modality over the other. We saw the rise of vector databases that couldn’t adequately support even simple boolean filters. Traditional search backends fared little better, with primitive support for the semantic ranking and retrieval opportunities unlocked by AI.

In the rest of this post we’ll explain the main differentiators of Vespa that let it deliver on all these criteria.

Completeness, freshness, and speed

Completeness means indexing all the information that may be relevant to any task the AI is intended to solve. In Perplexity’s case, this means indexing a large high-quality selection of web pages, while in an enterprise, it means indexing large amounts of internal documents and data accumulated over many years. In any case, it means that indexes become large - hundreds of millions to billions of documents, with tens of vector embeddings each are not uncommon.

Vespa solves this by automatically, transparently and dynamically distributing content over many nodes, and co-locating all information, indexes and computation over the same entities on the same node to distribute load and avoid bandwidth bottlenecks. Application owners just specify the resources available to the cluster - the rest happens automatically.

Freshness - keeping content and signals up to date with what’s happening - means that the system must be able to apply a continuous stream of updates in real time at large scale, without impacting query performance. This is where Vespa’s unique index technology which is able to cheaply mutate index structures while they are read comes into play. Read more about how this works and how it can deliver the combination of performant search and high-throughput real-time writes in our technical report comparing ElasticSearch and Vespa.

While Vespa can update both vector and full-text indexes in true real time, this is of course more costly than updating simple structured fields. That is why Vespa also supports partial updates, which allow individual fields to be updated without impacting other fields or their indexes. This allows metadata and signals to be updated independently at high continuous rates, which is crucial for e.g., behavior signals, or price changes in e-commerce.

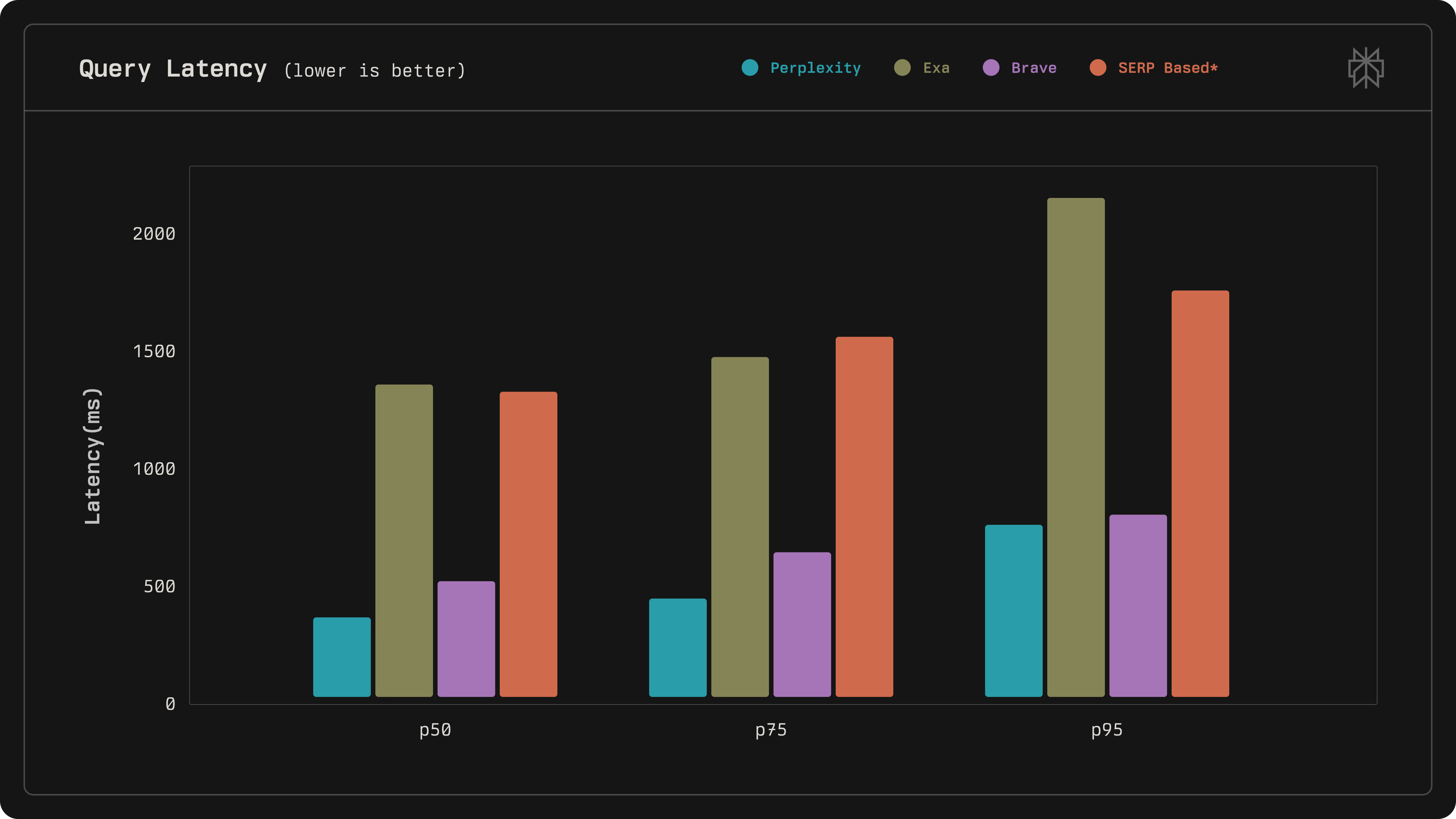

Speed is all about delivering results with the minimal possible latency. Perplexity shows they are beating the competition handily on this front:

Achieving low latency in toy systems doing simple operations like looking up vectors by approximate nearest neighbour is quite easy. However, doing this in real systems means combining filters, hybrid matching, machine-learned ranking, and more, at scale with handling continuous writes, and this is a very different matter.

This is where Vespa’s deep technology stack with decades of performance work, ranging from hardware-near C++-level optimization to algorithm innovations and high-level architecture design, really shines.

Fine-grained content understanding

In most respects it makes no difference if the consumer of a search service is a human or an LLM, the goals are still relevant results at scale with great performance. However, the LLMs lack of a continuously learned background context in combination with their much higher reading speed means a different approach to presenting results is in order. As Perplexity puts it:

In a world of AI agents, a search API must also carefully curate the context provided to developers’ models to ensure those models perform optimally and incorporate maximally accurate information. Effective context engineering requires the API to not only capture document-level relevance, but to treat the individual sections and spans of documents as first-class units in their own right.

That is, both documents and their internal content pieces (chunks) must be selected by relevance to provide efficient and effective content for the LLM. This is where Vespa’s unique layered ranking comes into the picture: Rather than returning all the content of the most relevant documents, Vespa can also score the individual chunks by relevance to only return the most relevant pieces of the most relevant documents.

While it is possible to perform the chunk selection on the complete data returned from a search, this is inefficient at scale, to the point of often not being practical at all with the kind of very large documents often seen in domains like finance and legal RAG applications.

Hybrid retrieval and ranking

Hybrid search means that content is retrieved and scored by both considering vector embeddings and full-text matching and relevance. Done right, this combines the strength of both approaches and avoids the weaknesses: Vector search lets you find content by matching at the semantic level but lacks preciseness, while full-text search lets you match precisely, including with rare terms such as e.g. person names and internal company monikers.

While ranking by vector closeness is quite simple, making good use of the information produced from lexical matching requires advanced ranking features far beyond simple features like bm25. In addition, metadata and signals are incorporated to truly elicit the best possible information from all the available content:

the ranking stack is designed to leverage the rich signal produced by the millions of user requests we serve each hour of the day

Combining all these signals to make a final selection of content requires machine-learned models, and trading off accuracy and computation cost:

We then employ multiple stages of progressively advanced ranking. Earlier stages rely on lexical and embedding-based scorers optimized for speed. As the candidate set is gradually winnowed down, we then use more powerful cross-encoder reranker models to perform the final sculpting of the result set.

Vespa provides all the necessary features to do all of this at large scale, integrated in a single application, such as Hybrid retrieval, advanced text ranking, multi-stage ranking with machine-learned models and cross encoders.

Putting it all together

It’s not just web search APIs that need quality, scale, and performance to be useful in RAG systems; it’s even more important for enterprise use cases where the stakes are higher. We have put together The RAG Blueprint to give everybody a starting point for developing solutions with the same level of quality for their own use cases. With the blueprint, you can have your own application up and running in a few minutes, and then iterate to make it yours while knowing you’ll have the foundation in place to deliver the quality, scalability and performance of the likes of Perplexity for your use case.