Clarm: Agentic AI-powered Sales for Developers with Vespa Cloud

Overview

Clarm helps open source software companies convert GitHub stars into revenue through AI-powered lead generation, content production, and developer support automation. When building their platform, Clarm needed a search engine that could power accurate, zero-hallucination AI responses while handling complex enrichment across millions of GitHub data points. They chose Vespa for its unified text, vector, and structured search capabilities and were able to deploy to production in under a day.

The Problem: Software / OSS Companies Struggle to Monetize

“Most OSS founders can’t get attention for their software initially. They’re so focused on building the product that marketing, SEO, and content creation get dropped. We built Clarm to automate all the growth work founders drop so they can focus on git commits,” explains Marcus Storm-Mollard, founder and CEO of Clarm.

The challenge is fundamental: 99% of successful open source is funded by businesses paying for solutions, but early-stage OSS companies lack the infrastructure to identify, engage, and convert those potential paying customers. They have thousands of GitHub stars but no clear path to revenue.

Clarm addresses this through three product pillars:

- Lead Generation & Prospecting: The killer feature. Clarm takes repo data from customers and competitors, enriches it with signals from website visits, commits, issues, and community engagement, then ranks and identifies good-fit prospects and potential enterprise buyers.

- Marketing & Content Production: Automated content creation from commits, PRs, and codebase analysis, helping OSS companies maintain consistent technical marketing.

- Developer Support Automation: AI-powered support across Discord, Slack, GitHub Issues, and websites, with deep integrations and analytics for scaling customer success.
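To make the "enrich, then rank" idea in the lead generation pillar concrete, here is a minimal sketch of multi-signal lead scoring. It is not Clarm's actual scoring model: the signal names, the weights, and the `Prospect` type are all hypothetical, chosen only to show how several engagement signals might be normalized and combined into one ranking.

```python
from dataclasses import dataclass

# Hypothetical weights per signal; a real system would tune or learn these.
WEIGHTS = {
    "site_visits": 0.2,
    "commits": 0.3,
    "issues": 0.3,
    "community_posts": 0.2,
}


@dataclass
class Prospect:
    name: str
    signals: dict  # signal name -> raw count (assumed schema)


def lead_score(prospect, maxima):
    """Weighted sum of signals, each scaled to [0, 1] by the batch maximum."""
    score = 0.0
    for signal, weight in WEIGHTS.items():
        top = maxima.get(signal, 0)
        if top > 0:
            score += weight * prospect.signals.get(signal, 0) / top
    return score


def rank_prospects(prospects):
    """Return prospects sorted from best fit to worst fit."""
    maxima = {
        signal: max((p.signals.get(signal, 0) for p in prospects), default=0)
        for signal in WEIGHTS
    }
    return sorted(prospects, key=lambda p: lead_score(p, maxima), reverse=True)


prospects = [
    Prospect("acme-corp", {"site_visits": 40, "commits": 2, "issues": 5, "community_posts": 1}),
    Prospect("hobbyist", {"site_visits": 3, "commits": 0, "issues": 1, "community_posts": 0}),
    Prospect("bigco", {"site_visits": 10, "commits": 8, "issues": 2, "community_posts": 4}),
]
ranked = rank_prospects(prospects)
print([p.name for p in ranked])
```

Normalizing each signal by its batch maximum keeps a single high-volume signal, such as site visits, from dominating the score.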

The Search Challenge

At the core of all three pillars sits a critical technical requirement: accurate, explainable search and retrieval.

“We realized early that search, not generation, was the real problem to solve. Generating LLM answers isn’t hard. Finding the right information to base them on is everything,” Marcus notes.

Clarm needed a search engine that could:

- Handle hybrid retrieval (combining text search, vector embeddings, and structured filters)

- Power zero-hallucination AI responses grounded in verifiable context

- Process and rank millions of GitHub data points in real time

- Support complex multi-signal enrichment for lead scoring

- Scale cost-effectively on a startup budget

Vector stores like Supabase (Postgres with pgvector) or search engines like Elasticsearch couldn’t, in Clarm’s evaluation, deliver the unified, production-grade retrieval required for its zero-hallucination architecture.

The Solution: Vespa’s Production-Grade Hybrid Search

Marcus discovered Vespa after researching how companies like Perplexity and Onyx built their advanced retrieval systems.

“We really liked that Vespa started as a search engine and evolved into a vector-based system. It made so much sense for what we were building. Vespa’s ranking and tensoring are built in, so we know our results are accurate and relevant right out of the box,” Marcus explains.

Rapid Deployment: Less Than One Day to Production

Clarm began experimenting with Vespa’s Docker image for local development, then transitioned to Vespa Cloud for production deployment during their Y Combinator batch.

“It took about half a day to set up how we wanted it. That speed of onboarding made a huge impact during YC. We just deployed it, and it worked,” Marcus recalls.

The quick deployment was critical. Clarm was racing toward Demo Day and couldn’t afford weeks of infrastructure setup. Vespa’s unified approach eliminated the complexity of stitching together multiple systems for text, vector, and structured search.

Key Vespa Capabilities Powering Clarm

- Unified Retrieval Pipeline

- Single query endpoint combining text search, vector similarity, and structured filters - no need to orchestrate multiple databases or services.

- Built-in Ranking & Tensor Operations

- Native support for complex ranking models and tensor operations means Clarm can implement sophisticated lead scoring without custom ranking layers.

- Real-Time Indexing

- GitHub events, user interactions, and enrichment signals are instantly searchable, enabling live lead intelligence and up-to-date AI responses.

- Scalable Cloud Deployment

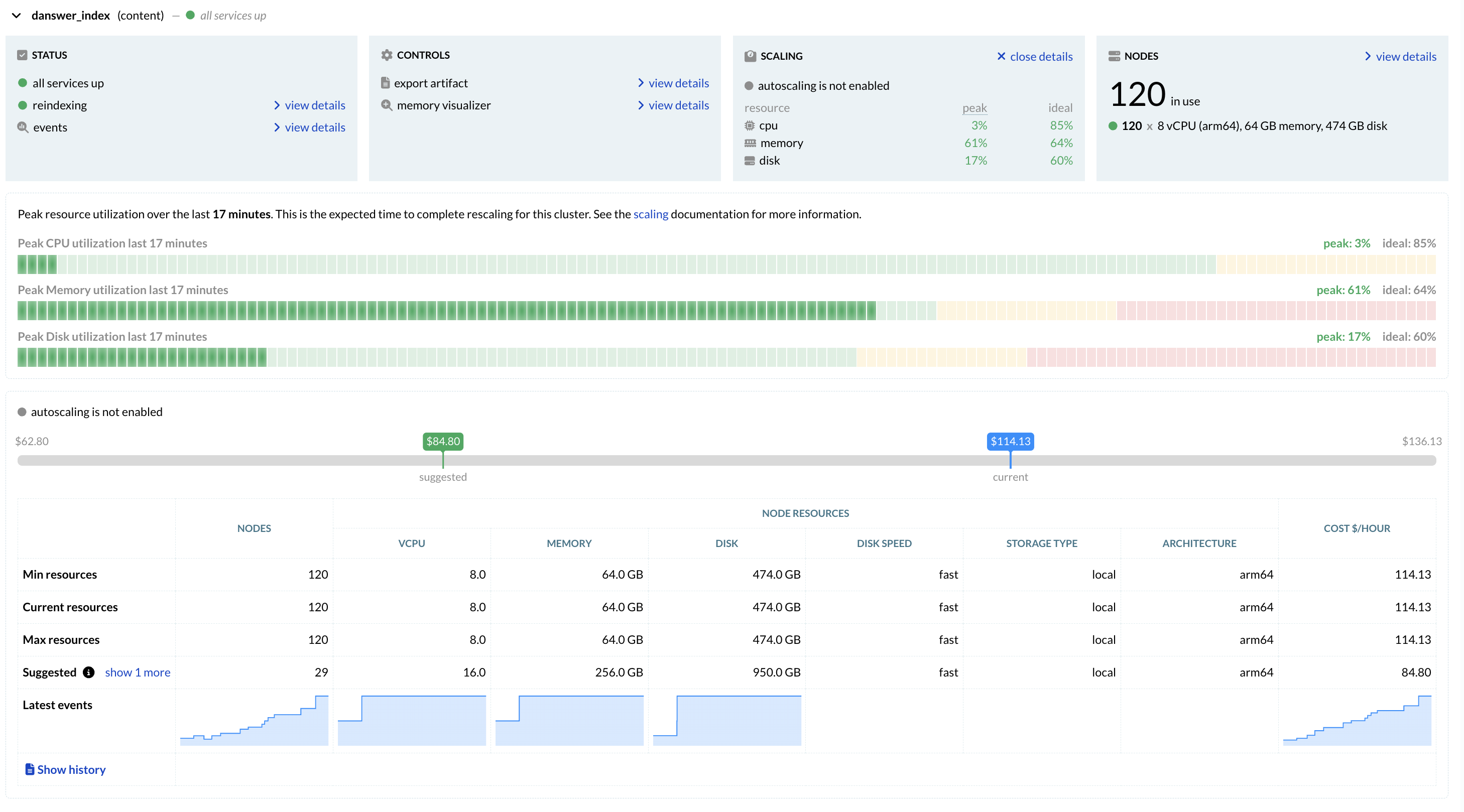

- Automatic scaling and high availability handled by Vespa Cloud, allowing Clarm’s two-person engineering team to focus on product features instead of infrastructure operations.

- Developer-Friendly Architecture

- Docker-based local development, straightforward schema design, and comprehensive documentation enabled rapid prototyping and iteration.
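As an illustration of the unified retrieval pipeline, the sketch below builds a single request body for Vespa's query API that combines a text match, a nearest-neighbor vector match, and a structured filter. It makes no claim about Clarm's real schema or queries: the field names `embedding` and `stars`, the query tensor name `q`, and the rank profile name `hybrid` are assumptions made for the example, and the body would be sent as JSON to an application's search endpoint.

```python
import json


def build_hybrid_query(text, embedding, min_stars, hits=10):
    """Build one Vespa query body mixing text, vector, and filter clauses.

    Assumed (hypothetical) schema: a tensor field `embedding`, an integer
    attribute `stars`, and a rank profile named `hybrid`.
    """
    yql = (
        "select * from sources * where "
        # Text match on the user query OR approximate nearest-neighbor match.
        "(userQuery() or ({targetHits: 100}nearestNeighbor(embedding, q))) "
        # Structured filter; int() keeps arbitrary input out of the YQL string.
        f"and stars >= {int(min_stars)}"
    )
    return {
        "yql": yql,
        "query": text,  # consumed by userQuery()
        "input.query(q)": [float(x) for x in embedding],  # query tensor
        "ranking": "hybrid",  # hypothetical rank profile name
        "hits": hits,
    }


body = build_hybrid_query("passkey login bug", [0.1, 0.2, 0.3], min_stars=500)
print(json.dumps(body, indent=2))
```

Because all three clauses live in one query, ranking runs over a single candidate set rather than merging results from separate systems after the fact.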

The Results

Clarm’s decision to build on Vespa Cloud delivered immediate impact:

- <1 Day to Production: From prototype to live search infrastructure deployed during YC

- Zero-Hallucination Architecture: Accurate retrieval enabling trustworthy AI responses grounded in verifiable data

- High-Quality Lead Intelligence: Sophisticated ranking of GitHub data points across 50K+ collective stars from customers like Better Auth (23.3K stars) and Cua (11.3K stars)

- Exceptional Support: Direct collaboration with Vespa’s engineering team throughout development

“The setup was easy, the support from the Vespa team was incredible, and everything just worked. We didn’t need to look anywhere else,” Marcus emphasizes.

Customer Success: GitHub Stars Becoming Revenue

Clarm’s customers are seeing measurable results from the AI-powered lead generation platform:

- Better Auth: Grew from 8K to 23.3K GitHub stars in 3 months with Clarm’s lead gen and engagement automation

- Cua: Scaled from 5K to 11.3K stars while identifying and converting enterprise prospects

- Skyvern AI: After struggling with support volume once it hit 19K stars, reduced its support workload by 94% with Clarm across GitHub, Discord, and Slack

- Engagement Depth: Developers “pair programming” with Clarm’s AI agents send thousands of queries a day, with sessions lasting up to 22 hours

What’s Next: Building the Future of OSS Monetization

Clarm represents a new category of growth infrastructure built specifically for software and open source companies. By combining Vespa’s production-grade retrieval with their own zero-hallucination agent framework, Clarm is proving that AI-powered sales and marketing can be trustworthy, explainable, and grounded in truth.

“We’re focused on proving product value and retaining customers right now. Everything depends on us growing our customers’ MRR and showing software and OSS companies they can build sustainable businesses,” Marcus shares.

That focus is reflected in Clarm’s positioning: “You build awesome software. Now build a business.” It resonates with software founders who want to monetize without compromising their community values. By recognizing that the vast majority of successful open source is ultimately funded by businesses paying for solutions, Clarm offers a clear path forward: free software for the community, paid solutions for enterprises.

Conclusion

Clarm’s architecture reinforces a lesson many teams learn the hard way: LLMs are only as reliable as the retrieval systems behind them. By treating retrieval as a first-class system, built on Vespa Cloud, Clarm unified text search, vector similarity, structured filtering, and ranking into a single production-grade platform, eliminating the fragility and guesswork common in vector-only stacks.

The result is an agentic AI platform that can reason over live data, explain its outputs, and scale predictably without stitching together multiple databases or post-hoc ranking layers. This foundation enabled a small team to move from prototype to production in under a day, operate across millions of GitHub signals, and help open source companies turn community adoption into sustainable revenue.

More importantly, Clarm’s success offers a blueprint for any organization building serious AI applications: when retrieval is reliable, ranking is expressive, and data is always fresh, AI systems become trustworthy enough to power real business outcomes. Clarm is building the future of OSS monetization, and Vespa is the retrieval engine making it possible.