Adaptive In-Context Learning 🤝 Vespa - part one

Photo by Pau Sayrol on Unsplash

Large Language Models (LLMs), such as GPT-4, are few-shot learners whose accuracy improves with the addition of examples in the prompt. In this series we explore this concept, which is known as In-Context Learning (ICL), and how we can use Vespa’s capabilities to adaptively retrieve context-sensitive examples for ICL.

Large Language Models (LLMs) and In-Context Learning

In-context learning can be confusing for those with a Machine Learning (ML) background, where learning involves updating model parameters, for example through fine-tuning. In ICL, the model parameters remain unchanged. Instead, labeled examples are added to the instruction prompt during inference.

Let us explain the differences with a small use case. Imagine that you want to build a service that categorizes online banking support requests. The first step for both classic ML and ICL is to gather labeled data: supervised examples of support texts and their correct categories. A concrete labeled example could look like this:

text: can i use my mastercard to add money to my account?

category: supported_cards_and_currencies

Then you gather a large number of such labeled examples.

With a classic ML approach, you would fine-tune a model (e.g., BERT) on the labeled examples, then deploy it to categorize previously unseen inputs and evaluate its accuracy.

You likely want to build a data flywheel so that you can retrain the model and avoid accuracy degradation over time. Degradation happens when the input texts change over time and no longer resemble the examples the model was trained on. A fancy word for this in ML circles is distribution shift.

That doesn’t sound too complicated when summarized in a few sentences, but it poses several challenges in practice.

- Model serving in production is isolated from training, requiring different infrastructure for both serving and training.

- There is a clear differentiation between real-time serving with low latency and training the model.

- There is a delay in the data flywheel loop, since the model must be retrained before it benefits from new examples.

- The model is specialized for one specific task.

In-context learning (ICL) with foundation models offers an alternative: instead of fine-tuning, you add examples to the prompt at inference time. Here is a prompt with two examples from the training set.

Please categorize the input text. Be a good LLM.

Examples:

text: can i use my mastercard to add money to my account?

category: supported_cards_and_currencies

text: When will the check I deposited post to my account?

category: balance_not_updated_after_cheque_or_cash_deposit

Think this through step by step and categorize this

text: Why isn't my deposit in my account?

category:

This prompting technique is known as k-shot ICL, where k can range from zero to many. As context windows grow, models can fit many more examples.
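A minimal sketch of assembling such a k-shot prompt in Python. The `Example` class and `build_prompt` function are hypothetical helpers, and the instruction wording is shortened from the prompt above; passing an empty example list yields a zero-shot prompt.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """A labeled training example: an input text and its category."""
    text: str
    category: str


def build_prompt(examples: list, query: str) -> str:
    """Assemble a k-shot prompt where k = len(examples); k = 0 is zero-shot."""
    lines = ["Please categorize the input text.", ""]
    if examples:
        lines += ["Examples:", ""]
        for ex in examples:
            lines += [f"text: {ex.text}", f"category: {ex.category}", ""]
    # The unlabeled input goes last, with an empty category for the LLM to fill in.
    lines += ["Categorize this", "", f"text: {query}", "category:"]
    return "\n".join(lines)


shots = [
    Example("can i use my mastercard to add money to my account?",
            "supported_cards_and_currencies"),
    Example("When will the check I deposited post to my account?",
            "balance_not_updated_after_cheque_or_cash_deposit"),
]
print(build_prompt(shots, "Why isn't my deposit in my account?"))
```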

The benefits compared to the classic ML pipeline are many:

- It’s simple.

- The instructions are in plain language.

- The model is frozen, so there is no model retraining.

- New categories, or more generally labels, can be introduced without retraining.

- No need to manage model training infrastructure, just serving (or use a commercial LLM API).

- The same model can be used for many different tasks by changing the prompt.

The downside is higher inference cost compared to specialized models.

Context sensitive ICL with Vespa

At inference time, we want to identify the labeled training examples that have the most significant impact on the accuracy of the downstream task. This can be formulated as an information retrieval problem where the unlabeled input example is the query and the labeled training examples are the corpus.

Instead of a fixed, static set of examples that is always added to the prompt, we move to context-sensitive, or adaptive, examples selected for each LLM prompt. LLMs still have limited context windows, so when the label space is large, it is impossible to fit all our examples into the prompt. By using retrieval, we can dynamically select the most appropriate examples.
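To make the retrieval framing concrete, here is a minimal Python sketch that ranks labeled examples by cosine similarity to the query embedding and keeps the top k. The three-dimensional vectors are made-up stand-ins for real embeddings and `retrieve` is a hypothetical helper; in a Vespa deployment, this step would instead be a nearest neighbor query over an embedding field.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, corpus, k):
    """Return the k labeled examples most similar to the query, best first.

    corpus: list of (text, category, embedding) tuples.
    """
    scored = [(cosine(query_vec, emb), text, cat) for text, cat, emb in corpus]
    scored.sort(key=lambda s: s[0], reverse=True)
    return scored[:k]


# Hypothetical embeddings, for illustration only.
corpus = [
    ("can i use my mastercard to add money to my account?",
     "supported_cards_and_currencies", [0.9, 0.1, 0.0]),
    ("When will the check I deposited post to my account?",
     "balance_not_updated_after_cheque_or_cash_deposit", [0.1, 0.9, 0.2]),
    ("My cash deposit hasn't shown up yet",
     "balance_not_updated_after_cheque_or_cash_deposit", [0.0, 0.6, 0.8]),
]
query = [0.05, 0.85, 0.3]  # stand-in for "Why isn't my deposit in my account?"
for score, text, cat in retrieve(query, corpus, k=2):
    print(f"{score:.3f} {cat}: {text}")
```

The retrieved pairs can then be formatted as the examples section of the prompt.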

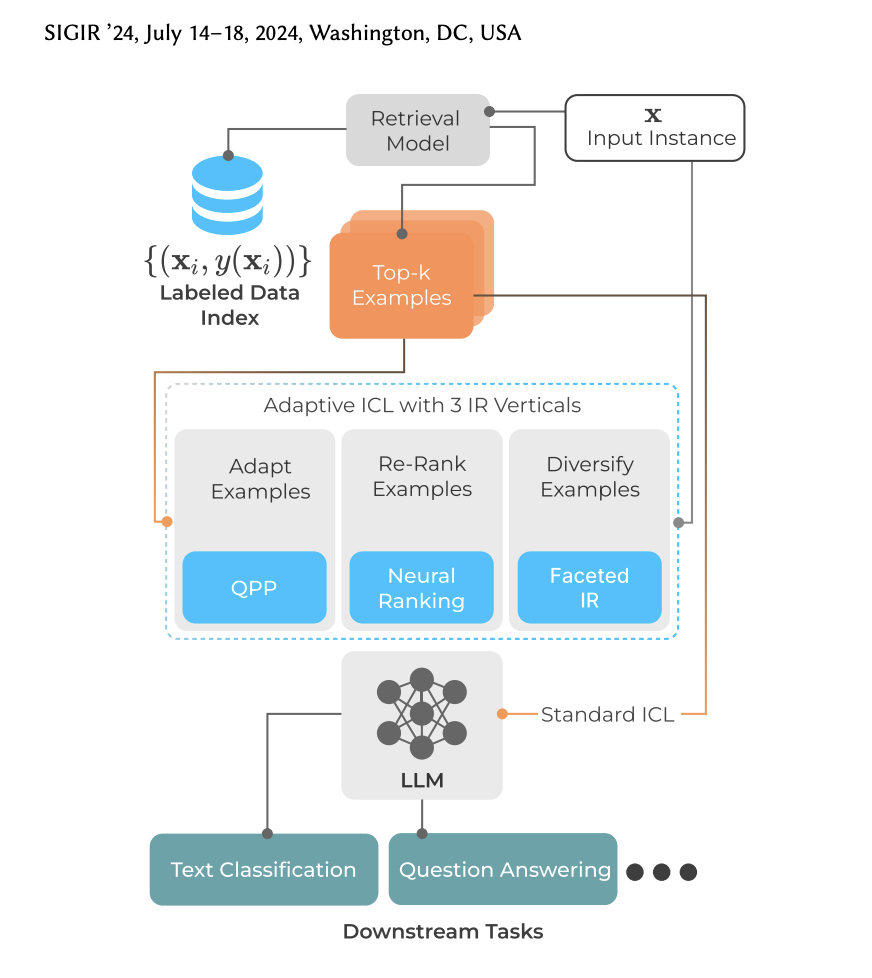

Figure from “In-Context Learning” or: How I learned to stop worrying and love “Applied Information Retrieval”

The perspective paper from SIGIR presents three well-known retrieval techniques for adaptive ICL:

- Query performance prediction (QPP), which is not about latency but about predicting whether a ranked list of documents is useful for the query. A simple variant is to learn a semantic cutoff threshold.

- Neural ranking, including bi-encoders (embeddings), ColBERT, or cross-encoders, which can be combined in a phased ranking pipeline.

- Facets, or grouping, for diversifying the retrieved documents (examples). We can group the examples by the labels so that we can add a diverse set of examples to the prompt. Grouping can also restrict the number of examples per label.
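The first and third techniques can be sketched together in a few lines of Python, assuming the examples have already been scored against the query and sorted best first. The fixed `threshold` is a hypothetical stand-in for a learned semantic cutoff, and `select_examples`, `max_per_label`, and `max_labels` are illustrative names rather than Vespa parameters; in Vespa, the per-label limits would correspond to its grouping feature.

```python
def select_examples(scored, threshold, max_per_label, max_labels):
    """Pick a diverse set of prompt examples.

    scored: list of (score, text, label) tuples, sorted by score, best first.
    """
    # Semantic cutoff: drop examples whose similarity is below the threshold,
    # a simple query-performance-prediction style filter.
    kept = [s for s in scored if s[0] >= threshold]

    # Group by label. Dicts preserve insertion order, so labels appear in the
    # order of their best-scoring example.
    groups = {}
    for item in kept:
        bucket = groups.setdefault(item[2], [])
        if len(bucket) < max_per_label:  # cap the examples per label
            bucket.append(item)

    # Keep only the best-ranked labels, then flatten back into one list.
    selected = []
    for label in list(groups)[:max_labels]:
        selected.extend(groups[label])
    return selected


# Hypothetical scores and labels, for illustration only.
scored = [
    (0.95, "deposit example 1", "deposit"),
    (0.93, "deposit example 2", "deposit"),
    (0.90, "deposit example 3", "deposit"),
    (0.88, "card example 1", "cards"),
    (0.70, "transfer example 1", "transfer"),
    (0.40, "fx example 1", "exchange_rate"),
]
for score, text, label in select_examples(scored, threshold=0.6,
                                          max_per_label=2, max_labels=3):
    print(f"{score:.2f} {label}: {text}")
```

Capping examples per label keeps one dominant label from filling the prompt, which matters most when the label space is large.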

In addition to similarity, there is also dissimilarity, which can help demonstrate the boundaries between the labels.
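One well-known way to put dissimilarity to work during selection is Maximal Marginal Relevance (MMR), which we swap in here as an illustration; the source does not prescribe it. Each candidate is scored by its similarity to the query minus its similarity to the examples already chosen, so near-duplicates are penalized and examples from neighboring labels get a chance. The vectors and the `mmr` helper are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(query_vec, candidates, k, lam=0.5):
    """Return indices of k candidates chosen by Maximal Marginal Relevance.

    candidates: list of (label, embedding) tuples.
    lam: 1.0 means pure relevance; lower values reward dissimilarity
    to the examples already selected.
    """
    selected = []
    remaining = list(range(len(candidates)))

    def score(i):
        relevance = cosine(query_vec, candidates[i][1])
        redundancy = max(
            (cosine(candidates[i][1], candidates[j][1]) for j in selected),
            default=0.0,
        )
        return lam * relevance - (1 - lam) * redundancy

    while remaining and len(selected) < k:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.0, 0.0]
candidates = [
    ("deposit", [0.9, 0.1, 0.0]),
    ("deposit", [0.9, 0.1, 0.05]),  # near-duplicate of the first example
    ("cards", [0.7, 0.0, 0.7]),
]
print(mmr(query, candidates, k=2, lam=1.0))  # [0, 1]: relevance only
print(mmr(query, candidates, k=2, lam=0.3))  # [0, 2]: adds a contrasting label
```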

Summary

We believe that using retrieval and ranking for ICL is a promising direction because it simplifies the overall ML pipeline. In a way, it democratizes ML, making it accessible to more organizations.

Maybe the most exciting thing about adaptive ICL is that each prediction forms a new training example. Indexing it into Vespa creates a data flywheel: examples generated by the model can aid new inferences and can optionally be verified by a human in the loop. The training example retrieval phase can use a filter to exclude non-verified examples.
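A minimal sketch of that loop, using a hypothetical in-memory `ExampleStore` in place of the Vespa index. Predictions are stored as unverified examples, a human reviewer can confirm or correct them, and retrieval skips unverified examples by default. In Vespa, the `verified` flag would presumably be a field in the document schema used as a query filter; the class and method names here are illustrative.

```python
from dataclasses import dataclass


@dataclass
class StoredExample:
    text: str
    label: str
    verified: bool  # True once a human has confirmed the label


class ExampleStore:
    """Hypothetical in-memory stand-in for the index of labeled examples."""

    def __init__(self):
        self._examples = []

    def add(self, text, label, verified=False):
        """Index an example; model predictions start out unverified."""
        ex = StoredExample(text, label, verified)
        self._examples.append(ex)
        return ex

    def verify(self, ex, correct_label):
        """Record a human reviewer's decision, correcting the label if needed."""
        ex.label = correct_label
        ex.verified = True

    def candidates(self, only_verified=True):
        """Examples eligible for retrieval into the prompt."""
        return [e for e in self._examples if e.verified or not only_verified]


store = ExampleStore()
store.add("can i use my mastercard to add money to my account?",
          "supported_cards_and_currencies", verified=True)

# The LLM's prediction for a new request becomes a new, unverified example.
prediction = store.add("Why isn't my deposit in my account?",
                       "balance_not_updated_after_cheque_or_cash_deposit")
print(len(store.candidates()))  # 1: the prediction is filtered out
store.verify(prediction, prediction.label)
print(len(store.candidates()))  # 2: now eligible as an ICL example
```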

In the next post in this series, we will demonstrate this technique on a classification dataset with a large label space (77 intent categories), where adaptive ICL achieves high accuracy, comparable to specialized models.